Automated User Interface Testing That Delivers Results

Automated UI testing is all about using software to act like a real person. It simulates clicks, scrolls, and typing to make sure an application's visual parts work exactly as they should. This whole process replaces the slow, repetitive, and frankly boring manual checks with fast, precise scripts.

What you get is a consistent user experience with every single code change. Think of it as the final quality gate that confirms your UI doesn't just work, but works reliably for everyone, every time.

Why Automated UI Testing Is No Longer Optional

In the world of rapid development cycles, sticking to manual UI testing is a surefire way to hit delays and let bugs slip through. Manual checks are not just sluggish; they're susceptible to human error and just can't keep up with the endless matrix of devices, browsers, and screen sizes your users have.

Automated UI testing tackles these problems head-on, which is why it's become a cornerstone of modern software delivery.

This isn't just about a small efficiency bump; it's a strategic necessity. The global market for automated software testing was valued at around USD 84.71 billion and is expected to explode to USD 284.73 billion by 2032. That kind of growth says it all—the industry is banking on automation to deliver higher-quality software, faster. If you're curious, you can dig into the numbers in Intel Market Research's analysis of automated software testing growth.

The Real-World Benefits for Your Team

When you automate the predictable, mind-numbing checks, you free up your QA engineers to do what people do best: complex, exploratory testing that requires intuition and critical thinking. Instead of clicking through the same login form for the tenth time today, they can hunt for subtle usability issues and investigate tricky user workflows that a script would never catch.

Here are the immediate wins you'll notice:

- Accelerated Feedback Loops: Developers get feedback in minutes, not hours or days. They can fix UI bugs while the code is still fresh in their minds, stopping small issues from turning into big ones.

- Massive Test Coverage: Automation makes it practical to test your app across tons of different configurations. You can check functionality on Chrome, Firefox, and Safari, on both desktop and mobile resolutions, with every single commit.

- Improved Accuracy and Consistency: Automated tests follow the exact same steps, in the exact same way, every single time. This kills the "it worked on my machine" excuse and gives you a reliable baseline for quality.

By integrating automated UI testing, you're not just finding bugs faster. You are building a safety net that gives your team the confidence to ship features quickly and frequently without fear of breaking the user experience.

Ultimately, this shift is about building a more resilient, efficient, and innovative engineering culture. It moves quality assurance from being a rushed, final step to being a core part of the development process itself.

Choosing Your UI Testing Framework Wisely

Picking the right UI testing framework is one of those foundational decisions that will stick with your project for years. It’s easy to get lost comparing features between big names like Cypress, Playwright, and the venerable Selenium. But honestly, the best choice isn’t about who has the longest feature list. It's about what fits your team, your app, and your sanity down the road.

Before you even open a single documentation page, take a hard look at your team. If your devs are JavaScript wizards, grabbing a framework like Playwright or Cypress is a no-brainer. They can jump right in and write tests in a language they already use every day. This dramatically lowers the barrier to entry and actually encourages more people to contribute to the test suite. On the other hand, if your team has deep Java roots, Selenium might feel more like home.

Key Factors Beyond Language

Your application's tech stack is another huge piece of the puzzle. Modern front-end frameworks like React and Vue are known for their complex state management and asynchronous rendering. You absolutely need a tool that can handle that without forcing you to write brittle, convoluted workarounds.

Here are a few other things I always consider:

- Setup Friction: How fast can a new developer get up and running? If it takes half a day to write a "hello world" test, you're going to lose people before they even start.

- Execution Speed: Faster tests mean faster feedback. Look for tools that support parallel execution out of the box. Slow CI pipelines are a developer's worst enemy.

- Debugging Experience: When a test inevitably fails—and it will—how painful is it to find out why? Features like time-travel debugging, automatic screenshots, and video recordings are lifesavers.

The goal isn’t just to find a tool that can click buttons. You're looking for something that makes writing, debugging, and maintaining tests a smooth part of your workflow, not a chore. A healthy community and clear documentation are non-negotiable.

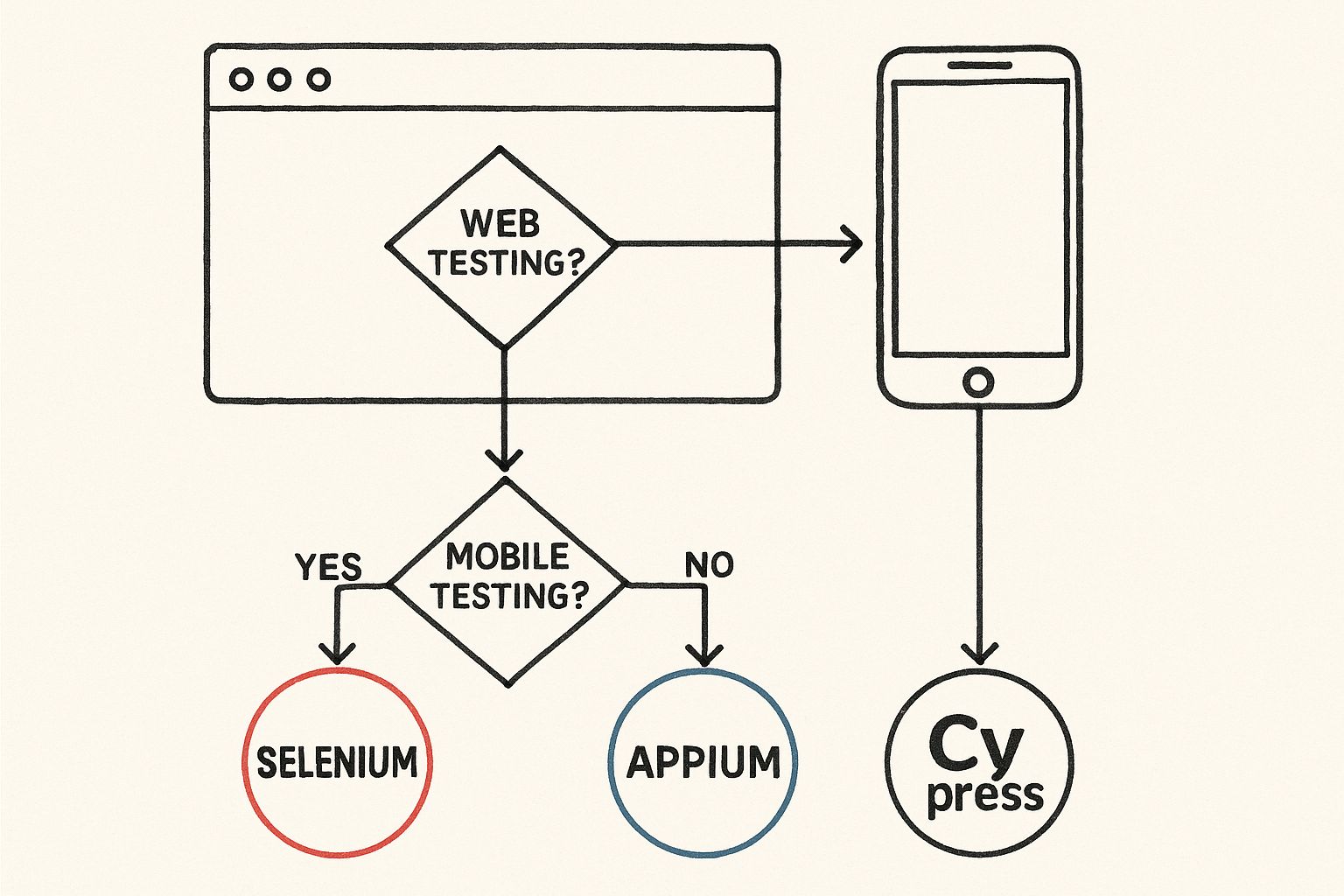

This decision tree gives you a simplified path to follow, starting with the most basic question: what kind of app are you even testing?

As you can see, the platform—web, mobile, or desktop—is your first big filter. It immediately narrows down the options to a more manageable list.

A Quick Framework Showdown

To help you get a clearer picture, I've put together a simple comparison of some of the most popular frameworks I've worked with. This isn't exhaustive, but it covers the highlights.

UI Testing Framework Comparison

| Framework | Primary Language | Best For | Key Advantage |
|---|---|---|---|
| Cypress | JavaScript | Modern web apps (React, Vue) | All-in-one testing experience with excellent debugging tools. |
| Playwright | JavaScript, TypeScript | Cross-browser testing on modern web apps | Blazing fast execution and superior browser automation APIs. |
| Selenium | Java, Python, C#, etc. | Large, enterprise-level projects | Massive language support and the largest community. |
| Appium | Java, Python, JS, etc. | Native and hybrid mobile apps | The de-facto standard for mobile testing, built on Selenium's WebDriver. |

This table should give you a starting point. Dig into the two or three that seem like the best fit for your team and project needs. The "best" framework is always the one that your team will actually use.

How to Write Stable and Maintainable UI Tests

Anyone can write an automated UI test. That’s the easy part. The real challenge? Crafting tests that don’t completely fall apart the second a developer tweaks a CSS class. Brittle, flaky tests are a maintenance nightmare and, worse, they kill your team's trust in the entire automation suite.

The secret is to build for resilience right from the start.

It all begins with how you find elements on the page. If you're relying on things like CSS classes or XPath, you're setting yourself up for failure. Those selectors are tied directly to styling and structure, which are constantly changing. Instead, your first rule should be to use selectors built specifically for testing.

Prioritize Resilient Selectors

The gold standard here is a dedicated test attribute like data-testid. These attributes have one job and one job only: to be a stable hook for your tests. They have nothing to do with styling, structure, or content, which means your tests won't break just because of a simple redesign.

- Fragile Selector: cy.get('.btn-primary.submit-button')

- Stable Selector: cy.get('[data-testid="login-submit-button"]')

Getting your development team to add these attributes is a tiny bit of upfront work that pays off enormously in test stability. Honestly, it’s a non-negotiable first step for any serious UI testing strategy.
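One way to keep these hooks consistent is to define the IDs once and build selectors from them. The sketch below is only an illustration: the TestIds registry, its entries, and the byTestId helper are hypothetical names, not part of Cypress or any other framework.

```typescript
// Hypothetical registry of test IDs; the names are illustrative only.
const TestIds = {
  loginEmail: "login-email-input",
  loginPassword: "login-password-input",
  loginSubmit: "login-submit-button",
} as const;

// Union of every ID in the registry, so a typo fails at compile time.
type TestId = (typeof TestIds)[keyof typeof TestIds];

// Builds an attribute selector for a data-testid value.
function byTestId(id: TestId): string {
  return `[data-testid="${id}"]`;
}

console.log(byTestId(TestIds.loginSubmit));
// prints: [data-testid="login-submit-button"]
```

In a Cypress test you could then write cy.get(byTestId(TestIds.loginSubmit)), and renaming an ID becomes a one-line change.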

Decouple Logic with the Page Object Model

As your test suite grows, you'll start noticing a lot of repetition. You're using the same selectors and the same sequences of actions over and over again. If a selector for the login button changes, you might have to hunt it down and update it in dozens of different test files. This is exactly where the Page Object Model (POM) saves the day.

POM is a design pattern that creates a clean separation between your test logic and the nitty-gritty details of UI interaction. You simply create a class for each page or major component in your app. This class holds all the selectors and methods for interacting with that specific piece of the UI.

Your test scripts should read like a user story, not a long string of technical commands. The Page Object Model abstracts away the "how" (clicking a data-testid) so you can focus on the "what" (logging in a user).

This approach keeps your selectors centralized. When the UI changes, you only have to make an update in one place: the relevant page object. Your tests immediately become cleaner, more readable, and so much easier to maintain. For a deeper dive into structuring your tests, check out our guide on automated GUI tests for more patterns and best practices.
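Here is a minimal sketch of the pattern, under some loud assumptions: the UiDriver interface, the LoginPage class, the selectors, and the RecordingDriver fake are all invented for illustration. In a real suite the driver role would be played by your framework's own page or browser object.

```typescript
// Minimal driver abstraction (an assumption for this sketch).
interface UiDriver {
  type(selector: string, text: string): void;
  click(selector: string): void;
}

// Page object: owns the selectors and interactions for the login page.
class LoginPage {
  private readonly driver: UiDriver;
  private readonly emailInput = '[data-testid="login-email-input"]';
  private readonly passwordInput = '[data-testid="login-password-input"]';
  private readonly submitButton = '[data-testid="login-submit-button"]';

  constructor(driver: UiDriver) {
    this.driver = driver;
  }

  // The "what": log a user in. The "how" stays inside this class.
  logIn(email: string, password: string): void {
    this.driver.type(this.emailInput, email);
    this.driver.type(this.passwordInput, password);
    this.driver.click(this.submitButton);
  }
}

// Fake driver that records actions so the sketch runs without a browser.
class RecordingDriver implements UiDriver {
  readonly actions: string[] = [];

  type(selector: string, text: string): void {
    this.actions.push(`type ${selector} ${text}`);
  }

  click(selector: string): void {
    this.actions.push(`click ${selector}`);
  }
}

const driver = new RecordingDriver();
new LoginPage(driver).logIn("user@example.com", "s3cret");
console.log(driver.actions); // three recorded actions: two type calls, then one click
```

The test body shrinks to a single logIn call, and if the submit button's selector changes, only LoginPage needs to know.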

Handle Asynchronicity and Test Independence

Modern web apps are incredibly dynamic. Content loads, animations play out, and network requests finish at their own pace. One of the biggest reasons for flaky tests is failing to properly account for these asynchronous operations.

Whatever you do, never use hard-coded waits like sleep(3000). It's a terrible practice. This either grinds your tests to a halt for no reason or, even worse, fails intermittently when the app takes just a fraction of a second longer to respond.

The right way is to use explicit waits that check for a specific condition before moving on:

- Wait for an element to be visible: Make sure the element is actually on the page before you try to click it.

- Wait for an element to be clickable: Confirm it isn’t disabled or hidden behind a loading spinner.

- Wait for a network request to complete: You can even intercept API calls and wait for their response before checking the UI state.
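To make the idea concrete, here is a rough sketch of what an explicit wait does under the hood: poll a condition until it holds or a timeout expires. The waitFor name, its parameters, and the defaults are assumptions for illustration. Frameworks like Playwright and Cypress ship their own built-in waiting, and in practice you would use theirs rather than rolling your own.

```typescript
// Polls `condition` until it returns true or `timeoutMs` elapses.
// Name and defaults are illustrative, not any framework's API.
async function waitFor(
  condition: () => boolean | Promise<boolean>,
  timeoutMs = 5000,
  intervalMs = 50,
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    if (await condition()) return;
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    // Pause briefly before checking again instead of busy-looping.
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Simulated UI state: the "button" becomes visible after roughly 120 ms.
let buttonVisible = false;
setTimeout(() => {
  buttonVisible = true;
}, 120);

waitFor(() => buttonVisible, 1000).then(() => {
  console.log("button is visible, safe to click");
});
```

Unlike a fixed sleep, this returns as soon as the condition is true and fails loudly with a clear message when it never becomes true.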

Finally, every single test must be completely independent. Each test needs to set up its own state and clean up after itself. Never, ever let one test rely on the outcome of another. This modular approach is what allows you to run tests in parallel and makes debugging failures a straightforward process, not an archaeological dig through cascading effects.
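A small sketch of what independence looks like in code, assuming a made-up in-memory Cart as a stand-in for application state. The Cart type, createCart factory, and tiny runAll runner are hypothetical; a real suite would use its framework's setup and teardown hooks for the same purpose.

```typescript
// Hypothetical in-memory cart standing in for application state.
interface Cart {
  items: string[];
}

// Each test calls this to get its own fresh state instead of sharing one.
function createCart(): Cart {
  return { items: [] };
}

type TestCase = { name: string; run: () => void };

const tests: TestCase[] = [
  {
    name: "adding an item puts it in the cart",
    run: () => {
      const cart = createCart();
      cart.items.push("book");
      if (cart.items.length !== 1) throw new Error("expected 1 item");
    },
  },
  {
    name: "a new cart starts empty",
    run: () => {
      const cart = createCart(); // does not depend on the test above
      if (cart.items.length !== 0) throw new Error("expected empty cart");
    },
  },
];

// Runs every case and reports PASS or FAIL per test.
function runAll(cases: TestCase[]): string[] {
  return cases.map((t) => {
    try {
      t.run();
      return `PASS ${t.name}`;
    } catch {
      return `FAIL ${t.name}`;
    }
  });
}

// Reverse the order on purpose: independent tests pass either way.
const results = runAll([...tests].reverse());
console.log(results);
```

If the two tests shared one module-level cart, the second would pass or fail depending on run order, which is exactly the kind of cascading failure you want to rule out.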

Weaving UI Tests into Your CI/CD Pipeline

Writing automated UI tests is a great start, but let's be honest—if they aren't running automatically on every single code change, you're leaving most of their value on the table. Tests that need someone to manually kick them off are just a missed opportunity. The real magic happens when you integrate them into your Continuous Integration/Continuous Deployment (CI/CD) pipeline, turning them from a periodic spot-check into a full-time quality gatekeeper for your app.

This means setting up your pipeline—whether it's on GitHub Actions, Jenkins, or another platform—to fire off your entire UI test suite for every new pull request. When you get this right, a failing test becomes a hard blocker. No more buggy code slipping into your main branch. It's hands-down the most effective way to catch visual regressions and usability quirks before they ever see the light of day.

Setting Up Your Workflow

The basic idea is straightforward. Your CI workflow file, something like a .github/workflows/ui-tests.yml, needs a job that triggers whenever a pull request is opened or updated. This job will check out the code, install all the dependencies, build your application, and then run your test command (think npm run test:ui).

This simple setup provides immediate, actionable feedback right inside the pull request. A developer can see if their changes broke a critical user flow in minutes, not days. They can jump on a fix while the context is still fresh in their mind. If you want a deeper dive into configuring these kinds of workflows, our guide on automated Playwright testing walks through it in detail.

A CI/CD pipeline that automatically runs UI tests is your safety net. It gives your team the confidence to ship features faster because they know a core set of user-facing functionality is always being verified.

The need for this level of rigor is growing, especially with the explosion of smart consumer electronics that demand flawless UI for seamless interaction. It’s also fueled by methodologies like DevOps, which depend on rapid iteration and, by extension, robust test automation. For a closer look at this trend, you can find more insights on the growth of the automation testing market.

Smartly Managing Your CI Runs

Here’s a practical problem: running a full UI test suite on every single commit can get expensive and slow, fast. For active projects with developers pushing changes constantly, you can quickly create a bottleneck where everyone is just waiting for CI results. Even worse, you risk running tests on slightly outdated code. This is where a merge queue becomes your best friend.

A tool like Mergify takes the chaos out of the process. Instead of mindlessly running tests on every commit, it creates a merge queue that intelligently batches pull requests together.

Here’s how it makes everything better:

- Sequential Testing: It lines up pull requests and tests each one against the most current version of your main branch, so a change that passed on stale code can't break main after it merges.

- Cost Efficiency: By batching multiple PRs into a single test run, you can slash your CI minutes and the costs that come with them. It's a huge win for your budget.

- Branch Protections: You can set hard-and-fast rules that require UI tests to pass before any code gets merged. This makes your pipeline a true quality gate, not just a friendly suggestion.
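The cost-efficiency point comes down to simple arithmetic, which the sketch below illustrates. It is not Mergify's actual algorithm: planBatches, the batch size, and the PR numbers are all hypothetical, and the savings shown are the best case where every batch passes. A failing batch has to be split and retested to find the culprit.

```typescript
// Splits queued pull request numbers into batches of at most `batchSize`.
function planBatches(queue: number[], batchSize: number): number[][] {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new Error("batchSize must be a positive integer");
  }
  const batches: number[][] = [];
  for (let i = 0; i < queue.length; i += batchSize) {
    batches.push(queue.slice(i, i + batchSize));
  }
  return batches;
}

const queued = [101, 102, 103, 104, 105]; // hypothetical PR numbers
const batches = planBatches(queued, 2);

// Three batches: 101+102, 103+104, and 105 on its own.
console.log(batches);
// Best case: 3 full UI test runs instead of 5.
console.log(`${batches.length} runs instead of ${queued.length}`);
```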

This setup ensures your main branch is always stable and ready to deploy. It’s the perfect balance between the need for thorough automated user interface testing and the real-world pressures of cost and developer productivity, making your CI/CD process both powerful and sustainable.

The Future of UI Testing with AI

The world of test automation is getting smarter, with artificial intelligence leading the charge. While the core principles of automated user interface testing we've covered are solid, AI and machine learning are finally starting to tackle some of the oldest, most stubborn challenges in this space—especially around test maintenance and coverage.

This isn't just some far-off, sci-fi concept. It's the very real, practical next step for quality assurance.

Imagine a test suite that doesn’t shatter every time a developer refactors a component. That’s the promise of self-healing tests. Using AI, these systems can figure out when a UI element—like a button or a form field—has changed and automatically update the test script to find the new selector. This is a game-changer that slashes the time engineers spend fixing brittle tests.

AI-Powered Visual and Functional Analysis

Beyond just fixing what's broken, AI is changing how we even find bugs in the first place.

AI-driven visual regression tools are worlds beyond simple pixel-to-pixel comparisons. They can intelligently spot meaningful visual defects—like a misaligned logo or overlapping text—while ignoring the insignificant rendering noise that would normally flood you with false positives. This is what makes visual testing actually practical at scale.
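To see why naive pixel comparison is so noisy, here is a sketch of the baseline approach, not the AI-driven one. It compares two tiny grayscale "images" as plain number arrays; diffRatio, the tolerance values, and the pixel data are invented for illustration.

```typescript
// Fraction of pixels whose grayscale values differ by more than `tolerance`.
function diffRatio(a: number[], b: number[], tolerance: number): number {
  if (a.length !== b.length) throw new Error("images must have the same size");
  if (a.length === 0) return 0;
  let changed = 0;
  for (let i = 0; i < a.length; i++) {
    if (Math.abs(a[i] - b[i]) > tolerance) changed++;
  }
  return changed / a.length;
}

const baseline = [10, 10, 200, 200];
const rerender = [11, 9, 201, 199]; // rendering noise: every pixel off by 1
const broken = [10, 10, 10, 200]; // one pixel genuinely changed

console.log(diffRatio(baseline, rerender, 0)); // 1: strict comparison flags every pixel
console.log(diffRatio(baseline, rerender, 2)); // 0: a small tolerance ignores the noise
console.log(diffRatio(baseline, broken, 2)); // 0.25: the real change still shows up
```

A fixed tolerance is a blunt instrument, though. It can't tell a harmless shadow tweak from a logo that moved by a few pixels, which is the gap the smarter tools aim to close.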

What's more, AI can analyze user behavior from your production data to automatically generate new, relevant test cases. By learning the most common user journeys and tricky edge cases, these tools can build tests that cover how people actually use your application, not just how developers think they use it.

The real magic of AI here is its ability to handle the dynamic, ever-changing nature of modern user interfaces. It shifts the focus from manually babysitting rigid scripts to building an intelligent system that adapts right alongside your application.

The Impact on DevOps and CI/CD

AI and machine learning turbocharge UI testing by enabling fast test case generation, predicting bug-prone areas, and creating self-maintaining scripts. All this works to significantly cut down on manual effort and time. Since UI testing is so critical for user experience, embedding AI-driven automation directly aligns with the market's demand for faster, more reliable software delivery.

This intelligence plugs directly into the development workflow. For instance, some platforms can now predict which tests are most likely to fail based on the specific code changes in a pull request. This allows your CI pipeline to run a smaller, highly targeted subset of tests, giving you much faster feedback.

This intersection of smart automation and workflow optimization is a key area to watch. If you're curious about how this applies more broadly, our article on AI for DevOps dives deeper into the topic. Getting ready for this shift means embracing tools that don't just run tests, but actually learn from them.

Even when you've got a solid plan, jumping into automated user interface testing can feel like navigating a minefield of questions. Let's walk through some of the most common hurdles teams face when they're getting started or trying to scale up. Getting these concepts right is the difference between a test suite that saves you time and one that just creates more headaches.

UI Testing vs. End-to-End Testing

One of the first things that trips people up is the line between UI testing and end-to-end (E2E) testing. They often use the same tools, like Cypress or Playwright, but their goals are worlds apart.

Think of it like this: a UI test is a specialist. It’s laser-focused on the visual bits and pieces of a single screen. Does the button look right? Does the dropdown menu actually open when you click it? It’s all about making sure the presentation layer works in isolation.

An end-to-end test, on the other hand, is the generalist. It’s there to validate an entire user journey from start to finish. This almost always involves clicking around the UI, but its real job is to confirm that all the systems—frontend, backend APIs, databases—are playing nicely together.

A UI test checks if the "Submit" button is clickable. An E2E test checks if clicking that button actually completes the purchase and updates the inventory database.

Dealing with Flaky Tests

There's no faster way to kill your team's faith in automation than a flaky test suite. You know the ones—they pass, they fail, they pass again, all without a single code change. A flaky test is honestly worse than no test at all. Taming them requires a multi-pronged attack.

- Ditch Fixed Waits: First rule of fighting flakiness: never use fixed waits like sleep(2000). Ever. Instead, use explicit waits that tell your test to pause until a specific condition is met, like an element becoming visible.

- Create Stable Selectors: As we covered earlier, your best friends are dedicated test IDs like data-testid. They insulate your tests from a designer's whim or a structural refactor.

- Be Smart About Retries: A limited, intelligent retry strategy in your CI pipeline can be a lifesaver for temporary hiccups like a network blip. But this is a crutch, not a fix for a genuinely broken test.

- Treat Flakiness Like a Bug: Don't just rerun the build and hope for the best. When a test gets flaky, put it on the board, assign it, and fix it. Let them pile up, and your test suite becomes useless noise.
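The retry and "treat it like a bug" points work best together: retry a bounded number of times, but record every test that needed a second attempt. The sketch below shows the idea with a hypothetical withRetries helper and a simulated flaky step. Frameworks such as Playwright and Cypress expose their own retries setting, so treat this as an illustration of the logic rather than something to copy into a suite.

```typescript
interface RetryResult<T> {
  value: T;
  attempts: number;
}

// Runs `action` up to `maxAttempts` times and reports how many it took.
function withRetries<T>(action: () => T, maxAttempts: number): RetryResult<T> {
  if (!Number.isInteger(maxAttempts) || maxAttempts < 1) {
    throw new Error("maxAttempts must be a positive integer");
  }
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return { value: action(), attempts: attempt };
    } catch (error) {
      lastError = error; // remember the failure and try again
    }
  }
  throw lastError; // every attempt failed: surface the real error
}

// Simulated step that fails once (a "network blip"), then succeeds.
let calls = 0;
const flakyStep = (): string => {
  calls += 1;
  if (calls === 1) throw new Error("simulated network blip");
  return "ok";
};

const outcome = withRetries(flakyStep, 2);
if (outcome.attempts > 1) {
  // Passing on retry is still a signal: log it so someone investigates.
  console.warn(`passed after ${outcome.attempts} attempts; track this as flaky`);
}
```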

A flaky test is a symptom of a deeper problem, either in your app or in your test code. Ignoring it is like taking on technical debt with compound interest—it will eventually bankrupt your entire testing effort.

The Myth of 100 Percent Coverage

Finally, let's talk about the big one: should you shoot for 100% UI test automation coverage?

No. Absolutely not.

This is a common and incredibly expensive mistake. Chasing that magic number forces you to write brittle, high-maintenance tests for trivial edge cases that provide almost no real value. It's a classic case of diminishing returns.

Instead, think 80/20. Pour your automation efforts into the critical 20% of user flows that deliver 80% of your app's value. We're talking about core user journeys—things like logging in, the checkout process, or whatever your product's main feature is.

Leave the weird edge cases and nuanced usability feedback to manual and exploratory testing, where a human's intuition is far more powerful than a script could ever be.
