Unlock Efficiency with AI in Automated Testing

For years, traditional automated testing has been a bit like a train on a fixed track. It’s incredibly fast and reliable, but the second the landscape changes—even slightly—the whole thing can derail. AI-driven testing, on the other hand, is more like an autonomous vehicle that can see the road ahead and adapt to changes on the fly. It's not just about making testing faster; it's about making it smarter.

The Next Frontier in Software Quality

We've all been there. The standard script-based automation has served us well, especially for stable applications. But these scripts are notoriously brittle. A tiny UI change, like a developer renaming a button or moving a field, can trigger a domino effect of test failures. Suddenly, your QA team is spending more time fixing broken tests than finding actual bugs in the product. This constant maintenance cycle has become a serious bottleneck in today's fast-paced development world.

This is exactly the problem AI-driven testing is built to solve. It represents a fundamental shift away from simple "do this, then do that" scripts toward a more intelligent and adaptive approach to quality.

Think of it this way: a traditional test script is like a printed set of turn-by-turn directions. If a road is closed, you're stuck. An AI-powered test is like a GPS that sees the roadblock, instantly calculates a new route, and still gets you to your destination.

This guide will serve as your roadmap for navigating this shift. We'll break down the core ideas behind AI in automated testing, look at how it's being used today, and explore the real-world business benefits it delivers. My goal is to show you why this isn't just another trend, but a strategic move toward building better software, faster.

A Market Poised for Growth

The industry's hunger for more efficient quality assurance is undeniable. As more and more companies embrace Agile and DevOps, the old way of testing just can't keep up, and this has fueled explosive growth in the market. The global automation testing market was valued at around USD 35.52 billion and is projected to reach USD 169.33 billion by 2034. That's a compound annual growth rate (CAGR) of 16.90%, which signals a massive industry-wide transition. You can explore more data on automation testing market trends.
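If you want to sanity-check growth figures like these, the CAGR formula is simple: (end value / start value) ^ (1 / years) - 1. The base year isn't given above, so this minimal sketch assumes a 10-year horizon (2024 to 2034). That horizon is our assumption, chosen because it is the one at which the quoted 16.90% rate reproduces both market values.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values from the text, in USD billions.
START_USD_BN = 35.52
END_USD_BN = 169.33
YEARS = 10  # assumption: 2024 base year, not stated in the source figures

rate = cagr(START_USD_BN, END_USD_BN, YEARS)
print(f"Implied CAGR: {rate:.2%}")  # Implied CAGR: 16.90%
```

The same function works for any "from X to Y by year Z" projection, as long as you know (or explicitly assume) the starting year.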

So, what does this actually mean for your team? Throughout this guide, we’ll cover:

- Core Concepts: How AI actually learns, adapts, and improves testing processes over time.

- Real-World Use Cases: Concrete examples of how companies are already winning with AI.

- Business Benefits: The tangible impact on your team's speed, costs, and overall product quality.

- Implementation Best Practices: A practical look at how to get started without getting overwhelmed.

How AI Is Rewriting the Rules of Testing

To really get what AI in automated testing means, think about hiring a genius QA teammate who learns on the job. You wouldn't give them a rigid, step-by-step script for every single task. You'd expect them to observe, understand the context, and make smart decisions. That’s the leap from old-school scripts to modern AI systems.

Traditional automation is pretty brittle. It relies on fixed "locators," such as an element ID, a CSS selector, or an XPath expression, to find things on a webpage. The moment a developer tweaks an element's ID, that test breaks. AI, on the other hand, understands the application more like a person does. It recognizes a "login button" by its look, position, and purpose, not just its name.

Visual Validation That Sees Like a User

One of the first things you'll notice with AI is its knack for advanced visual testing. A classic script can check if a button's code exists, but can it tell you if it looks right? AI can. It captures screenshots, compares them against an approved baseline, and flags visual bugs that scripts would completely ignore.

For instance, an AI tool catches the subtle but frustrating issues that often slip through:

- A button that now overlaps another element, making it impossible to click on smaller screens.

- Text that suddenly appears in the wrong font or color, throwing off the entire design.

- An image that doesn't load, leaving behind an ugly broken icon.

These are exactly the kinds of problems that drive users crazy but are nearly invisible to purely code-based checks. AI gives you a tireless set of eyes focused on pixel-perfect consistency.
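The baseline comparison at the heart of visual validation can be sketched without any AI at all: diff the new screenshot against the approved one and flag the page when too many pixels changed. In this minimal sketch, screenshots are hypothetical stand-ins (nested lists of grayscale values from 0 to 255), and the `tolerance` and `max_changed_ratio` thresholds are illustrative assumptions. AI-based tools typically aim to go further, learning to ignore harmless rendering noise such as anti-aliasing while still catching real layout breaks.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of grayscale pixel values, 0-255


def diff_ratio(baseline: Image, candidate: Image, tolerance: int = 10) -> float:
    """Fraction of pixels whose brightness differs by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(b) != len(c) for b, c in zip(baseline, candidate)
    ):
        return 1.0  # a size change is itself a visual regression worth flagging
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for b_row, c_row in zip(baseline, candidate)
        for b, c in zip(b_row, c_row)
        if abs(b - c) > tolerance
    )
    return changed / total if total else 0.0


def check_visual(baseline: Image, candidate: Image,
                 max_changed_ratio: float = 0.01) -> Tuple[bool, float]:
    """Return (passed, ratio); the check fails when too many pixels changed."""
    ratio = diff_ratio(baseline, candidate)
    return ratio <= max_changed_ratio, ratio


# Usage: a 4x4 "page" where a 2x2 block (say, a broken image icon) changed.
baseline = [[255] * 4 for _ in range(4)]
candidate = [row[:] for row in baseline]
for r in (1, 2):
    for c in (1, 2):
        candidate[r][c] = 0

passed, ratio = check_visual(baseline, candidate)
print(passed, ratio)  # False 0.25
```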

Self-Healing Tests That Adapt to Change

If you've ever worked with test automation, you know the biggest headache is maintenance. In fact, some studies show developers spend up to 50% of their time just fixing and maintaining tests that break with every minor update. AI tackles this problem head-on with self-healing tests.

Picture this: a developer changes the "Submit" button to "Continue." A traditional script instantly fails because it can't find "Submit." An AI-powered system, however, doesn't panic. It looks at other clues—the button's location, its color, its function in the workflow—and intelligently figures out that "Continue" is the new "Submit." Then it automatically updates the test to reflect the change.

This self-healing capability turns a test failure into a learning opportunity. The system doesn't just break; it adapts, saving countless hours of manual repair and keeping your CI/CD pipeline from grinding to a halt.
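One common way to approximate self-healing is an element "fingerprint": record several attributes of the element when the test is written, and when the primary locator stops matching, score every element on the page against that fingerprint and accept the best match only above a confidence threshold. This is a simplified, rule-based sketch, not any vendor's actual algorithm; the `Element` fields, point values, 50 px position slack, and threshold are all hypothetical, and real tools typically learn such weights rather than hard-coding them.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    element_id: str
    tag: str
    text: str
    x: int      # horizontal position on the page, in pixels
    y: int      # vertical position on the page, in pixels
    role: str   # function in the workflow, e.g. "form-submit"


# Hypothetical points per matching attribute (10 points maximum).
POINTS = {"element_id": 3, "tag": 1, "text": 2, "position": 2, "role": 2}
POSITION_SLACK_PX = 50  # hypothetical: "same place" means within 50 px


def similarity(recorded: Element, candidate: Element) -> int:
    """Score how closely a candidate matches the recorded fingerprint."""
    score = 0
    if recorded.element_id == candidate.element_id:
        score += POINTS["element_id"]
    if recorded.tag == candidate.tag:
        score += POINTS["tag"]
    if recorded.text.lower() == candidate.text.lower():
        score += POINTS["text"]
    if (abs(recorded.x - candidate.x) <= POSITION_SLACK_PX
            and abs(recorded.y - candidate.y) <= POSITION_SLACK_PX):
        score += POINTS["position"]
    if recorded.role == candidate.role:
        score += POINTS["role"]
    return score


def heal(recorded: Element, page: List[Element], threshold: int = 5) -> Optional[Element]:
    """Use the original ID if it still exists, else the best confident match."""
    for el in page:
        if el.element_id == recorded.element_id:
            return el  # primary locator still works; nothing to heal
    best = max(page, key=lambda el: similarity(recorded, el), default=None)
    if best is not None and similarity(recorded, best) >= threshold:
        return best
    return None  # no confident match: report a real failure instead of guessing


# Usage: "Submit" was renamed to "Continue" and given a new ID.
recorded = Element("submit-btn", "button", "Submit", 400, 600, "form-submit")
page = [
    Element("cancel-btn", "button", "Cancel", 250, 600, "form-cancel"),
    Element("continue-btn", "button", "Continue", 410, 605, "form-submit"),
]
healed = heal(recorded, page)
print(healed.element_id if healed else "no match")  # continue-btn
```

The threshold is the important design choice: without it, the healer would happily "fix" a test by clicking the wrong button, turning a real bug into a silent pass.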

Intelligent Test Creation from User Behavior

The most exciting frontier for AI in testing is intelligent test generation. Instead of having engineers manually write every single test case, AI can watch how real people interact with your live application.

By analyzing user sessions, the AI identifies the most common paths, critical workflows, and even edge cases you hadn't considered. It then uses that data to automatically generate new, highly relevant test scripts. This approach ensures your testing efforts are always focused on what your users actually do, bridging the gap between theoretical test scenarios and real-world behavior.
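At its simplest, "identifying the most common paths" is frequency analysis over recorded sessions. This sketch assumes sessions have already been reduced to ordered lists of screen names (a hypothetical format; real analytics exports vary), counts each distinct journey, and turns the most frequent ones into test-case outlines. An AI tool would go further, for example clustering near-identical paths and weighting rare but critical flows, which plain counting does not do.

```python
from collections import Counter
from typing import Dict, List, Tuple

Session = List[str]  # ordered screens visited in one user session


def top_journeys(sessions: List[Session], n: int = 2) -> List[Tuple[Tuple[str, ...], int]]:
    """Return the n most frequent complete journeys with their counts."""
    counts = Counter(tuple(s) for s in sessions if s)
    return counts.most_common(n)


def to_test_outline(journey: Tuple[str, ...]) -> Dict[str, object]:
    """Turn a journey into a hypothetical test-case outline."""
    return {
        "name": "journey: " + " -> ".join(journey),
        "steps": [f"navigate to {screen}" for screen in journey],
    }


sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "product", "cart"],
    ["home", "account"],
]

for journey, count in top_journeys(sessions):
    print(count, to_test_outline(journey)["name"])
# 3 journey: home -> search -> product -> cart -> checkout
# 2 journey: home -> product -> cart
```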

So, when a testing tool claims to be "AI-powered," what does that really mean for your day-to-day work? It's less about a far-off sci-fi concept and more about practical, intelligent features designed to solve the biggest headaches in traditional automation. These are the capabilities that make smarter, faster quality assurance a reality.

At its heart, AI in automated testing isn't about replacing human testers. It's about augmenting them. Instead of just blindly following a script, these tools can observe, adapt, and even reason, turning rote, manual work into a background process. This lets your QA team graduate from being script mechanics to true quality strategists.

Let's dive into what these platforms can actually do.

Autonomous Test Creation

One of the most impressive features is autonomous test creation. Think of an AI model behaving like a curious new user clicking around your application for the first time. It explores every nook and cranny—pushing buttons, trying out forms, and navigating from screen to screen to map out all the possible user journeys.

From that initial exploration, the AI automatically generates a whole suite of test cases. It identifies the most critical workflows on its own, often uncovering user paths your team might have overlooked. This isn't just a marginal speedup; it can shrink the time needed to build a solid regression suite from weeks down to a matter of hours.
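Under the hood, exploration is essentially a graph traversal: each screen is a node, each clickable action is an edge, and every cycle-free sequence of actions from the entry screen is a candidate user journey. This sketch does a breadth-first walk over a hypothetical, hard-coded navigation map; a real tool discovers those edges by driving a live browser and needs much smarter pruning than the simple cycle check and depth limit used here.

```python
from collections import deque
from typing import Dict, List

# Hypothetical navigation map: screen -> {action label: destination screen}.
APP: Dict[str, Dict[str, str]] = {
    "login": {"submit valid credentials": "dashboard", "forgot password": "reset"},
    "reset": {"send reset email": "login"},
    "dashboard": {"open settings": "settings", "log out": "login"},
    "settings": {"save profile": "dashboard"},
}


def explore(app: Dict[str, Dict[str, str]], start: str, max_depth: int = 4) -> List[List[str]]:
    """Breadth-first enumeration of action sequences from `start`.

    A path ends when every next step would revisit a screen (a cycle),
    the screen has no actions, or the path reaches `max_depth` actions.
    """
    journeys: List[List[str]] = []
    queue = deque([(start, [start], [])])  # (current screen, screens seen, actions)
    while queue:
        screen, seen, actions = queue.popleft()
        extended = False
        if len(actions) < max_depth:
            for action, target in app.get(screen, {}).items():
                if target in seen:
                    continue  # skip cycles so the walk terminates
                queue.append((target, seen + [target], actions + [action]))
                extended = True
        if not extended and actions:
            journeys.append(actions)  # a complete candidate journey
    return journeys


for journey in explore(APP, "login"):
    print(" -> ".join(journey))
# forgot password
# submit valid credentials -> open settings
```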

Smart Test Maintenance and Self-Healing

We all know the pain of brittle tests. A minor UI tweak breaks a dozen scripts, and suddenly your whole afternoon is gone. This is where AI-driven smart maintenance comes in. When a test fails, the AI doesn't just throw up its hands and report an error—it investigates.

Using machine learning, it can tell the difference between a real bug and a superficial change.

For instance, if a developer changes a button's label from "Submit" to "Continue," or alters its color or CSS ID, traditional scripts would break. An AI, however, understands the element's context and function. It recognizes it as the same button and automatically updates the test script to match. This "self-healing" capability is a lifesaver, keeping false alarms out of your CI/CD pipeline and freeing up your engineers from the endless cycle of script repair.

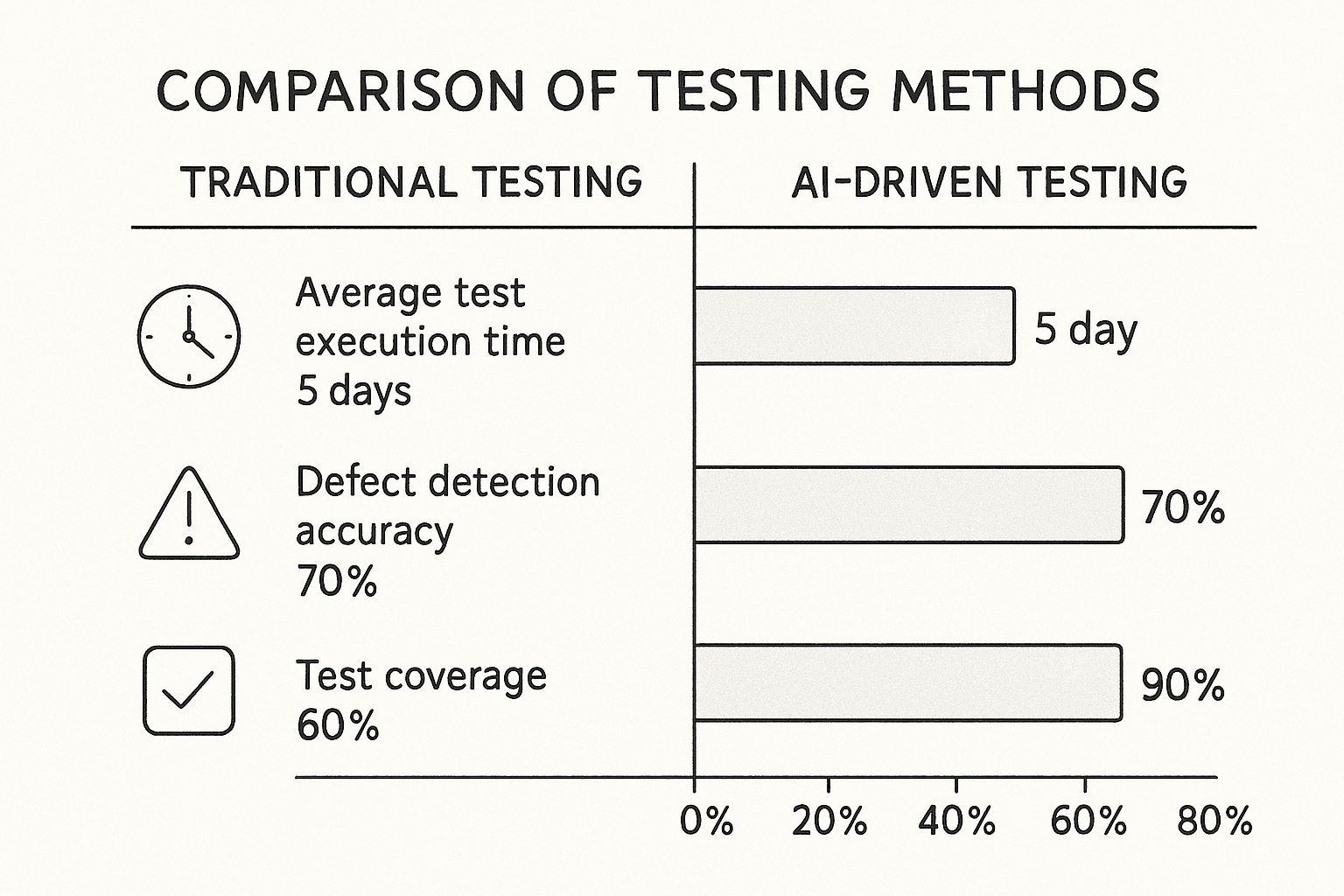

The differences between old and new methods become stark when you compare them side-by-side.

Traditional vs AI-Powered Automated Testing

To really grasp the shift, let's compare the two approaches directly. The following table breaks down how AI changes the game across several key aspects of the testing lifecycle.

| Aspect | Traditional Automated Testing | AI-Powered Automated Testing |
|---|---|---|
| Test Creation | Manual script writing; time-consuming and requires coding expertise. | Autonomous discovery and generation of test cases based on app exploration. |
| Maintenance | Brittle; requires constant manual updates for UI or element changes. | Self-healing tests that automatically adapt to changes, reducing maintenance. |
| Resilience | Highly dependent on specific locators (ID, XPath) that break easily. | Understands the UI contextually, not just by locators, making tests robust. |
| Coverage | Limited by the number of test cases that can be manually written and maintained. | Can generate comprehensive test suites covering more user paths automatically. |
| Analysis | Provides simple pass/fail logs, requiring manual debugging to find the root cause. | Offers detailed root cause analysis, pinpointing the source of failures. |
| Skill Requirement | Requires specialized test automation engineers to write and maintain scripts. | Low-code/no-code interfaces make it accessible to a broader range of team members. |

As you can see, the move to AI isn't just an incremental improvement; it fundamentally redefines what's possible in automated testing.

This infographic really drives the point home, showing just how much of an impact these AI capabilities can have on performance.

The numbers are striking. With AI-driven methods, teams report test execution times dropping by up to 80%, while also catching more defects and achieving much broader test coverage.

Advanced Root Cause Analysis

Finding a bug is just the first step. The real work is figuring out why it happened. This is another area where AI shines, offering advanced root cause analysis.

When a test run is finished, you get more than a simple pass/fail report. The AI digs deep into the data, analyzing everything from application logs and browser console errors to network requests.

Instead of a vague message like "Checkout test failed," you get a specific, actionable insight: "The checkout test failed because the payment API responded with a 503 error, which correlates with a database connection timeout recorded in the server logs." This level of detail transforms hours of painful debugging into minutes of focused problem-solving. It's these core abilities—creating tests on its own, fixing them when they break, and explaining why they failed—that truly make AI in automated testing such a powerful shift for engineering teams.
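The correlation in that example can be approximated with a time-window join: take the moment the test failed and pull every error-level event from other sources (network traces, server logs) that happened shortly before it. This sketch assumes logs are already parsed into simple records and uses a hypothetical 30-second window; the timestamps and log messages are invented for illustration. Real root cause analysis layers ranking and learned failure patterns on top of this kind of join.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class LogEvent:
    timestamp: datetime
    source: str   # e.g. "network", "server", "browser-console"
    level: str    # "INFO", "WARN", or "ERROR"
    message: str


def correlate(failure_time: datetime, events: List[LogEvent],
              window: timedelta = timedelta(seconds=30)) -> List[LogEvent]:
    """Error events within `window` before the failure, oldest first."""
    hits = [
        e for e in events
        if e.level == "ERROR" and failure_time - window <= e.timestamp <= failure_time
    ]
    return sorted(hits, key=lambda e: e.timestamp)


t0 = datetime(2024, 1, 1, 12, 0, 0)  # invented failure time
events = [
    LogEvent(t0 - timedelta(minutes=5), "server", "ERROR", "cache miss storm"),
    LogEvent(t0 - timedelta(seconds=12), "server", "ERROR", "database connection timeout"),
    LogEvent(t0 - timedelta(seconds=10), "network", "ERROR", "POST /api/payment -> 503"),
    LogEvent(t0 - timedelta(seconds=8), "browser-console", "INFO", "checkout clicked"),
]

for e in correlate(t0, events):
    print(f"{e.source}: {e.message}")
# server: database connection timeout
# network: POST /api/payment -> 503
```

Reading the output oldest-first is what turns two separate errors into a story: the database timed out, and then the payment API returned a 503.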

Putting AI Testing to Work in the Real World

It's one thing to talk about the theory, but what really matters is how AI in automated testing holds up in the trenches. The good news is that companies are already seeing real, measurable results from putting these smart tools to work.

We’re not just talking about small efficiency gains here. We’re seeing fundamental shifts in how teams approach quality. Let’s walk through three different scenarios where AI is making a huge impact.

Flawless Launches in E-Commerce

Picture a big e-commerce brand gearing up for Black Friday. A smooth user experience is absolutely critical. But with countless combinations of devices and browsers, manually checking every little visual detail is a practical impossibility. This is where AI-powered visual testing really shines.

Instead of just running scripts that confirm a button works, the company uses an AI that actually looks at every page for visual bugs. It's an extra set of eyes that catches the kinds of embarrassing issues that old-school tests would completely miss:

- Promotional banner text that overlaps and becomes unreadable.

- Buttons that look fine on a desktop but are misaligned on a specific iPhone model.

- Product images that appear as broken links instead of loading properly.

By catching these customer-facing glitches before they go live, the AI ensures every shopper gets a polished, professional experience, which protects both revenue and brand reputation when it matters most. For a closer look at this topic, our guide on automated user interface testing goes into much more detail.

Bulletproof Security in Fintech

In the fintech world, the stakes couldn't be higher. Security testing has to be airtight, but manually creating enough realistic and complex transaction data to find every single vulnerability is a monumental task. This is a perfect job for AI-driven test data generation.

A fintech firm can use an AI model trained on patterns from anonymized production data. From that, the AI can generate millions of synthetic but completely plausible user profiles and transaction histories. This lets the team simulate sophisticated fraud scenarios and stress-test their systems in ways that would be far too time-consuming to dream up by hand.

The result is a much stronger security posture. By taking over the creation of high-quality test data, the AI helps uncover subtle vulnerabilities that simpler, manually created data might never expose.
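Here is a heavily simplified version of that idea: estimate a distribution from (anonymized) sample data, draw synthetic records from it, then deliberately inject a known fraud pattern so detection logic has something to find. This sketch stands in for a trained generative model with a single log-normal fit of transaction amounts, a much cruder technique than the AI models described above. The record fields, the "burst of small identical transfers" fraud pattern, the sample amounts, and the seed are all hypothetical.

```python
import math
import random
import statistics
from typing import Dict, List


def fit_lognormal(amounts: List[float]) -> Dict[str, float]:
    """Estimate log-normal parameters from positive sample amounts."""
    logs = [math.log(a) for a in amounts]
    return {"mu": statistics.mean(logs), "sigma": statistics.stdev(logs)}


def synthesize(params: Dict[str, float], n: int, seed: int = 42) -> List[Dict[str, object]]:
    """Draw n synthetic transactions with plausible amounts."""
    rng = random.Random(seed)  # seeded so the test data is reproducible
    return [
        {"account": f"acct-{rng.randint(1, 500):04d}",
         "amount": round(rng.lognormvariate(params["mu"], params["sigma"]), 2),
         "label": "normal"}
        for _ in range(n)
    ]


def inject_burst_fraud(records: List[Dict[str, object]], account: str,
                       count: int = 20, amount: float = 9.99) -> List[Dict[str, object]]:
    """Append a hypothetical fraud pattern: many small, identical transfers."""
    burst = [{"account": account, "amount": amount, "label": "fraud"} for _ in range(count)]
    return records + burst


sample = [12.50, 48.00, 7.25, 130.00, 22.10, 65.40, 15.00, 89.99]  # invented sample
params = fit_lognormal(sample)
data = inject_burst_fraud(synthesize(params, n=1000), account="acct-9999")
print(len(data), sum(1 for r in data if r["label"] == "fraud"))  # 1020 20
```

Because the injected records are labeled, the team can measure exactly how many of them a fraud detector catches, which is hard to do with unlabeled production traffic.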

Stability and Speed for SaaS Platforms

Consider a fast-growing SaaS company that pushes out new code several times a day. Their biggest headache? A regression test suite that's constantly breaking. As the UI evolves, their test scripts become a maintenance nightmare, which slows down the entire release cycle.

To solve this, they brought in a testing platform with self-healing capabilities. Now, when a developer changes a button’s ID or restructures a component, the AI understands the intent behind the change. Instead of just failing the test and creating another ticket, it intelligently updates the script’s locators on the fly. This one feature had a massive effect, cutting down their test maintenance effort by over 70%. Their CI/CD pipeline stays green, and developers can ship code confidently, knowing the tests will adapt instead of breaking.

The Strategic Business Value of Smarter Testing

While the tech side of AI in automated testing gets a lot of attention, the real story is what it does for the business's bottom line. Bringing AI into your testing process isn't just an engineering upgrade; it's a financial strategy that turns your QA department from a cost center into a genuine source of value.

This shift lets QA teams break free from the endless cycle of writing and fixing test scripts. Instead of just being script mechanics, they can step into a more strategic role. This frees them up to focus on high-impact work like exploratory testing, deep usability analysis, and really digging into the end-user experience—the kind of work that actually makes the product better and keeps customers happy.

Accelerating Speed to Market

One of the biggest wins you'll see right away is how much faster you can ship. AI tackles the biggest bottlenecks in traditional testing head-on. By automating test creation and using self-healing to handle maintenance, development teams can finally hit the accelerator.

This newfound speed gives you a serious competitive edge. Faster feedback loops mean bugs get squashed earlier in the process, which cuts down development costs. More importantly, it means you can get new features into your customers' hands long before your competitors do.

Enhancing Quality and Coverage

AI-powered testing tools don't just move faster; they're also smarter. They can explore an application on their own, generating tests that cover way more ground than any manual effort possibly could. This broader, deeper coverage means fewer bugs make it into production.

The result is a higher-quality product that builds customer trust and reduces churn. When users encounter fewer issues, satisfaction and retention rates improve, which is crucial for long-term business success.

This isn't some far-off future, either. Big companies are already using AI-driven testing solutions, and many others are experimenting with AI models to get their QA workflows dialed in. AI is changing software testing from a final gatekeeper into a continuous, intelligent process that fuels faster releases and better software.

Driving Down Operational Costs

Finally, let's talk about the direct impact on your budget. The time your team spends on test maintenance is a huge, often hidden, operational cost. By automating the repair of broken tests, AI slashes the hours your engineers waste on tedious, low-value tasks.

Think about the savings here:

- Reduced Maintenance: Drastically cuts the hours spent fixing brittle and outdated test scripts.

- Faster Debugging: AI-powered root cause analysis helps developers pinpoint and fix bugs in a fraction of the time.

- Optimized Resources: Frees up your most skilled engineers to focus on innovation instead of repetitive grunt work.

This combination of speed, quality, and cost-efficiency makes a compelling business case. To learn more about how AI supports these goals, see our article on the role of AI for DevOps. Investing in smarter testing isn't just about finding more bugs; it's about building a more agile, efficient, and profitable organization.

A Practical Roadmap for Implementing AI Testing

Bringing any new technology into your workflow takes a solid plan, and integrating AI in automated testing is certainly no exception. If you just dive in headfirst without a clear strategy, you're setting yourself up for confusion and a lot of wasted effort. The trick is to start small, prove the value, and then scale up intelligently.

Right off the bat, teams often run into a bit of a learning curve. Even though many AI tools are built to be user-friendly, your team still needs time to get comfortable with what the tool can do and figure out how to fit it into their daily routine. Another huge factor is data quality. AI models are only as good as the data they learn from, so having clean, relevant data is non-negotiable for getting accurate test results.

Finally, you can't forget about how it all plugs into your existing setup. If the AI tool doesn't play nice with your CI/CD pipeline, you’ll create more problems than you solve.

Getting Started the Right Way

For a smooth transition, think evolution, not revolution. Don't try to rip and replace everything at once. Instead, start by pinpointing your single biggest testing headache. Are flaky tests driving everyone crazy? Is writing new tests taking forever? Or is your visual testing just not catching enough bugs? Nailing down that one specific problem will help you pick the right tool for that exact job.

Once you have a target, follow these simple steps:

- Run a Pilot Project: Choose a small, low-risk part of your application and try out an AI testing tool there. This gives your team a safe space to learn without the pressure of a major release, and it lets you prove the tool's worth on a manageable scale.

- Pick Tools That Match Your Team's Skills: Look at tools through the lens of your current team. Some platforms are completely codeless, which is great for manual QAs looking to get into automation. Others are built for seasoned engineers who want to dig in and customize everything. For a deeper dive, check out our guide to modern automation testing tools like Playwright.

- Define What Success Looks Like: Before you even start the pilot, decide how you'll measure success. Are you trying to cut down test maintenance time by 25%? Or maybe boost your test coverage by 15%? Having concrete goals makes it easy to see the actual impact and justify the investment later.
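Writing those targets down as numbers makes the end-of-pilot review mechanical. This sketch checks baseline and pilot measurements against the two example goals above, interpreting "25%" and "15%" as relative changes. That interpretation is an assumption: a coverage goal could just as well mean 15 percentage points, so settle the definition before the pilot starts. All measurements are made-up placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Goal:
    metric: str
    baseline: float
    pilot: float
    target_change: float  # relative change required, e.g. -0.25 for "cut by 25%"

    def actual_change(self) -> float:
        return (self.pilot - self.baseline) / self.baseline

    def met(self) -> bool:
        # Negative targets mean "reduce"; positive targets mean "increase".
        if self.target_change < 0:
            return self.actual_change() <= self.target_change
        return self.actual_change() >= self.target_change


goals: List[Goal] = [
    Goal("maintenance hours / sprint", baseline=40.0, pilot=28.0, target_change=-0.25),
    Goal("test coverage (%)", baseline=60.0, pilot=66.0, target_change=0.15),
]

for g in goals:
    status = "met" if g.met() else "not met"
    print(f"{g.metric}: {g.actual_change():+.0%} ({status})")
# maintenance hours / sprint: -30% (met)
# test coverage (%): +10% (not met)
```

Note how the coverage example exposes the ambiguity: moving from 60% to 66% coverage is a 10% relative gain but only 6 percentage points, and neither meets a 15% target.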

Generative AI is making this process even more powerful by autonomously creating test cases and mimicking complex user journeys. It's no wonder the generative AI in testing market is expected to rocket from USD 48.9 million to USD 351.4 million by 2034. You can discover more insights about the generative AI testing market to see where things are headed.

A successful implementation isn’t about replacing your entire testing framework overnight. It's about strategically applying AI to solve specific problems and demonstrating tangible wins, building momentum one step at a time.

Answering Your Questions About AI in Testing

As teams start exploring AI in automated testing, a few key questions always come up. People naturally want to know how it will affect their jobs, which tools are worth looking into, and what all the technical jargon really means. Let's tackle those common questions head-on.

Will AI Replace Human QA Engineers?

No, not at all. The goal of AI isn't to replace QA engineers but to make them more effective. Think of it as a force multiplier.

AI is fantastic at handling the tedious, repetitive tasks that bog down human testers—like running thousands of regression tests or spotting tiny visual glitches across different browsers. By offloading that work, it frees up your team's experts to focus on what humans do best: creative, strategic, and intuitive testing.

Instead of just writing and fixing scripts, QA engineers can now dive deeper into exploratory testing, analyze user experience, and contribute to product strategy. The role simply shifts from script maintainer to quality strategist, with AI serving as a powerful assistant.

How Do We Choose the Right AI Testing Tool?

It's easy to get lost in a sea of features. The best way to start is by pinpointing your biggest headache. Are flaky tests constantly breaking your builds? Is test creation taking forever? Or is UI validation a constant struggle? Find the tools that are laser-focused on solving that problem first.

Next, think about your team. Do you have manual testers who would benefit from a codeless platform? Or are your engineers looking for something they can customize and extend with code? Your team's existing skill set should guide your choice.

Finally, any tool you choose must play nicely with your current CI/CD pipeline. Before you go all-in, run a small pilot project. There's no better way to see how a tool really performs than by testing it in your own environment.

The best tool isn't the one with the most features; it's the one that solves your team's most pressing problem most effectively.

What Is the Difference Between AI and ML in Testing?

It's easy to use these terms interchangeably, but there's a simple way to think about them. Artificial Intelligence (AI) is the broad concept of creating smart systems that can perform tasks that typically require human intelligence. Machine Learning (ML) is the engine that makes much of that possible.

Think of it this way: AI is the goal—to make testing smarter. ML is how we get there.

For instance, an ML algorithm can analyze past test runs and code commits to predict which areas are most at risk for new bugs. That's a form of predictive analytics powered by ML. Another ML model can visually learn your app's layout, allowing it to automatically fix broken tests when a button's location or label changes. That's the magic behind self-healing tests.

In short, ML gives the system the ability to learn from data, which is what makes AI-powered testing so intelligent and adaptive.
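To make the predictive-analytics example more concrete, here is a deliberately simple stand-in for such a model: rank modules by a smoothed historical failure rate, boosted by how much each module changed in the commit under test. This is a hand-written heuristic, not a trained ML model; the module names, run history, churn numbers, prior values, and churn formula are all hypothetical. A real system would learn from its own data how much history and churn actually matter.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class History:
    runs: int       # past CI runs that exercised this module
    failures: int   # how many of those runs failed


def risk_scores(history: Dict[str, History], churn: Dict[str, int],
                prior_rate: float = 0.05, prior_weight: int = 20) -> List[Tuple[str, float]]:
    """Rank modules by smoothed failure rate, scaled up by lines changed.

    Smoothing pulls modules with few runs toward `prior_rate`, so a single
    unlucky failure does not make a rarely tested module look critical.
    """
    scores = []
    for module, h in history.items():
        rate = (h.failures + prior_rate * prior_weight) / (h.runs + prior_weight)
        changed_lines = churn.get(module, 0)
        score = rate * (1 + changed_lines / 100)  # hypothetical churn boost
        scores.append((module, round(score, 3)))
    return sorted(scores, key=lambda item: item[1], reverse=True)


history = {
    "payments": History(runs=200, failures=30),
    "search": History(runs=200, failures=4),
    "profile": History(runs=3, failures=1),
}
churn = {"payments": 120, "profile": 10}  # lines changed in the current commit

for module, score in risk_scores(history, churn):
    print(module, score)
# payments 0.31
# profile 0.096
# search 0.023
```

Without smoothing, "profile" (1 failure in 3 runs) would rank as the riskiest module; the prior keeps a tiny sample from dominating the ranking.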

Ready to make your CI/CD pipeline smarter and more efficient? Mergify uses intelligent automation to reduce CI costs, secure your code, and eliminate merge conflicts. See how Mergify can help your team ship faster and more reliably.