Top 8 Test Environment Management Best Practices for 2025

In the fast-paced world of software development, the stability and reliability of your test environments directly impact release velocity and code quality. An inconsistent, poorly configured, or unavailable environment can introduce flaky tests, create frustrating delays for developers, and ultimately undermine the entire CI/CD pipeline. As applications grow in complexity, distributed across microservices and cloud infrastructure, managing these critical testing grounds becomes a significant bottleneck, demanding more than just ad-hoc solutions.

This is where a strategic approach becomes essential. Implementing a robust set of test environment management best practices is no longer a luxury for mature organizations; it's a foundational requirement for any team serious about continuous delivery. By establishing systematic processes for provisioning, data handling, and maintenance, engineering teams can create a predictable and resilient foundation for quality assurance. This ensures that every test run, from a local developer machine to a pre-production staging area, is consistent, repeatable, and trustworthy.

This guide moves beyond theory to provide a definitive blueprint for achieving that stability. We will dive into eight specific, actionable best practices that directly address the most common challenges teams face. From automating environment creation with Infrastructure as Code to implementing sophisticated test data management and optimizing cloud-native setups, you will learn how to:

- Eliminate configuration drift and ensure environment consistency.

- Accelerate feedback loops for developers and QA engineers.

- Optimize resource utilization and reduce infrastructure costs.

- Enhance security within your non-production environments.

By mastering these techniques, your team can transform test environments from a source of friction into a strategic asset, empowering you to ship higher-quality software with greater speed and confidence.

1. Environment Provisioning Automation

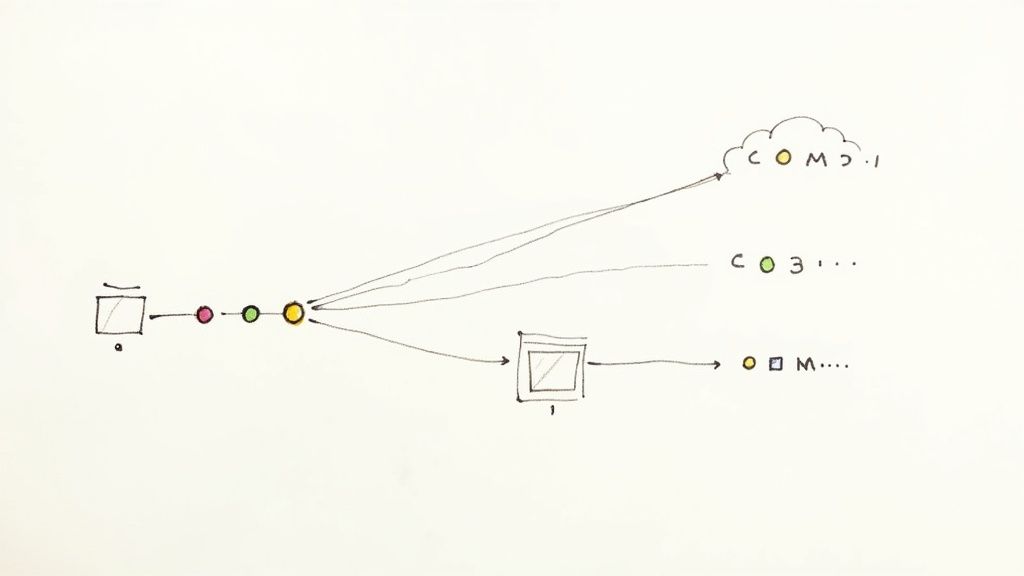

Automated environment provisioning is the practice of using Infrastructure as Code (IaC) and automation scripts to create, configure, and deploy test environments on demand. This approach codifies the environment's entire configuration, from virtual machines and networking rules to application dependencies and service integrations, into version-controlled files. By doing so, it replaces slow, error-prone manual setups with a repeatable, predictable, and rapid process, which is foundational to modern test environment management best practices.

This method ensures that every environment, whether for a developer's local testing or a complex integration stage, is built from the same version-controlled definition. The primary benefit is a sharp reduction in "it works on my machine" issues, because any drift can be corrected by re-provisioning from code. Companies like Netflix leverage this at scale, using AWS and their open-source platform, Spinnaker, to provision consistent testing environments for thousands of microservices daily. Similarly, Spotify utilizes Kubernetes and Helm charts to define and deploy entire application stacks into isolated environments with a single command.

Key Benefits and Implementation

Adopting automated provisioning delivers significant advantages. It dramatically reduces lead time for new environments from days or weeks to mere minutes. It also enhances consistency across all stages of the software development lifecycle, leading to more reliable test results and fewer deployment failures. Furthermore, it enables cost optimization through ephemeral, on-demand environments that are created for a specific task and torn down immediately after, preventing resource waste.

For effective implementation, consider these actionable steps:

- Start Small and Iterate: Begin by automating a simple, single-service environment. Gradually expand the automation scripts to include more complex, multi-tiered applications as your team gains proficiency.

- Leverage Containerization: Use technologies like Docker and Kubernetes to create lightweight, portable, and isolated environments. Containers encapsulate application code and dependencies, making provisioning exceptionally fast and consistent across any infrastructure.

- Version Control Everything: Treat your IaC scripts (e.g., Terraform, CloudFormation) and configuration files (e.g., Ansible playbooks) as you would your application code. Store them in a Git repository to track changes, enable collaboration, and facilitate rollbacks.

- Create Reusable Templates: Develop standardized templates for common environment configurations. This allows teams to self-serve new environments quickly without starting from scratch, promoting both speed and governance.
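As a rough illustration of the reusable-template idea, the sketch below renders a per-branch environment spec from a shared template. The template contents, the image names, and the `render_environment` and `environment_name` helpers are all hypothetical; in practice the rendered spec would be handed to whichever IaC tool the team uses.

```python
import copy

# Hypothetical shared template for a small test environment.
BASE_TEMPLATE = {
    "services": {
        "app": {"image": "example/app:1.4.2", "replicas": 1},
        "db": {"image": "postgres:16", "replicas": 1},
    },
    "ttl_hours": 8,
}


def environment_name(branch, build_id):
    """Derive a DNS-friendly name for an ephemeral environment."""
    slug = "".join(c if c.isalnum() else "-" for c in branch.lower())
    slug = slug.strip("-")[:30].strip("-")
    return f"test-{slug}-{build_id}"


def render_environment(template, name, overrides=None):
    """Return a new environment spec built from a shared template."""
    spec = copy.deepcopy(template)  # never mutate the shared template
    spec["name"] = name
    for service, settings in (overrides or {}).items():
        if service not in spec["services"]:
            raise KeyError(f"unknown service in overrides: {service}")
        spec["services"][service].update(settings)
    return spec
```

Because the template is deep-copied, teams can self-serve variations (for example, more replicas for a load test) without changing the governed baseline.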

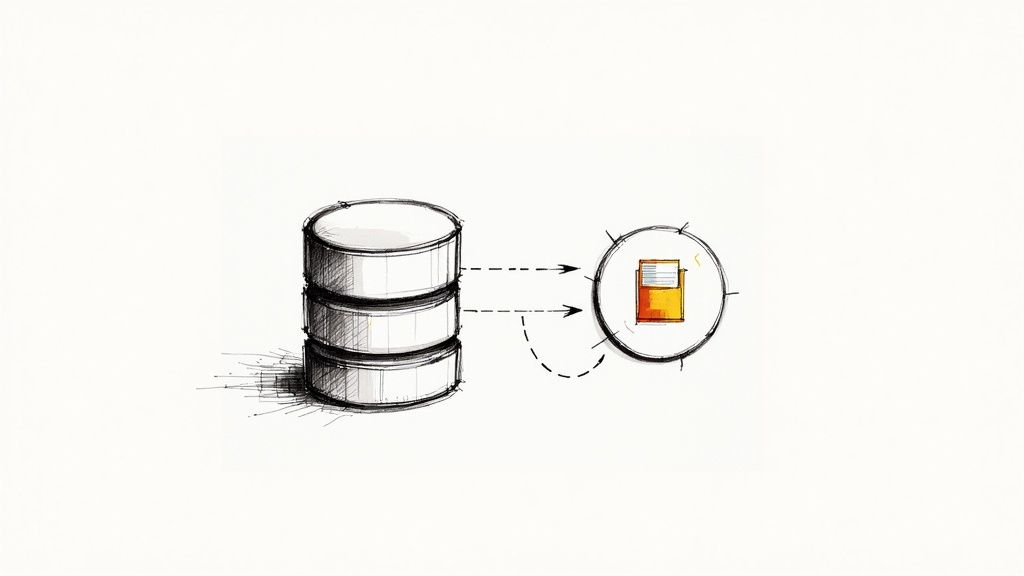

2. Test Data Management and Masking

Test data management (TDM) is the systematic process of creating, managing, and securing non-production data for testing purposes. It ensures that testing activities use data that is realistic, relevant, and secure, which is a critical component of effective test environment management best practices. This practice involves techniques like data subsetting, synthetic data generation, and data masking to protect sensitive information while providing developers and QA teams with high-quality data for accurate testing.

Without proper TDM, teams often resort to using raw or poorly sanitized production data, which can expose sensitive information and violate privacy regulations like GDPR or HIPAA. Leading organizations have integrated robust TDM strategies to mitigate these risks. For instance, financial institutions like JPMorgan Chase use advanced data masking to test transaction systems without exposing real customer financial data. Similarly, e-commerce giants like eBay leverage synthetic data generation to simulate diverse user behaviors and test new features at scale, ensuring application reliability without compromising user privacy.

Key Benefits and Implementation

Effective test data management improves test accuracy by providing realistic data that covers a wide range of scenarios, including edge cases. It significantly enhances security and compliance by preventing sensitive data breaches in lower environments. Furthermore, it accelerates testing cycles by enabling on-demand access to appropriate and compliant test data, removing a common bottleneck for development and QA teams. When implementing test data management, it's crucial to consider broader data protection principles to ensure comprehensive compliance and security.

For successful implementation, consider these actionable steps:

- Identify and Classify Sensitive Data: Begin by cataloging all data elements within your applications and classifying them based on sensitivity (e.g., PII, PHI, financial). This inventory is the foundation for your masking strategy.

- Implement Consistent Masking Rules: Define and apply standardized data masking rules across all non-production environments. This ensures that a masked value in one environment remains consistent in another, preserving referential integrity for integrated tests.

- Use Data Subsetting and Synthesis: Instead of cloning entire production databases, use subsetting to create smaller, targeted datasets that are faster to provision and cheaper to store. Supplement these with synthetically generated data to cover scenarios not present in the subset.

- Establish a Data Refresh Cadence: Define a schedule for refreshing test data to keep it relevant to the current production state. Align this schedule with your release cycles to ensure tests are always run against fresh, pertinent data.
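One way to get consistent masking rules is keyed, deterministic pseudonymization: the same input always maps to the same masked token, so joins across tables and environments still line up. The sketch below uses HMAC-SHA256 from the Python standard library. The key, field names, and helper functions are assumptions for illustration, and keyed hashing is pseudonymization rather than full anonymization, so it may not satisfy every regulatory requirement on its own.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secret store, not source code.
MASKING_KEY = b"example-key-not-for-real-use"


def mask_value(value, field, key=MASKING_KEY):
    """Deterministically pseudonymize a value with HMAC-SHA256."""
    message = f"{field}:{value}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:16]


def mask_email(email, key=MASKING_KEY):
    """Replace an email address with a stable, non-routable placeholder."""
    token = mask_value(email.strip().lower(), "email", key)
    return f"user_{token}@example.test"


def mask_rows(rows, sensitive_fields, key=MASKING_KEY):
    """Return copies of the rows with the sensitive fields masked."""
    masked = []
    for row in rows:
        clean = dict(row)
        for field in sensitive_fields:
            if clean.get(field) is not None:
                clean[field] = mask_value(str(clean[field]), field, key)
        masked.append(clean)
    return masked
```

Masking `customer_id` in both a customers table and an orders table with the same key yields the same token in each, which is what preserves referential integrity for integrated tests.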

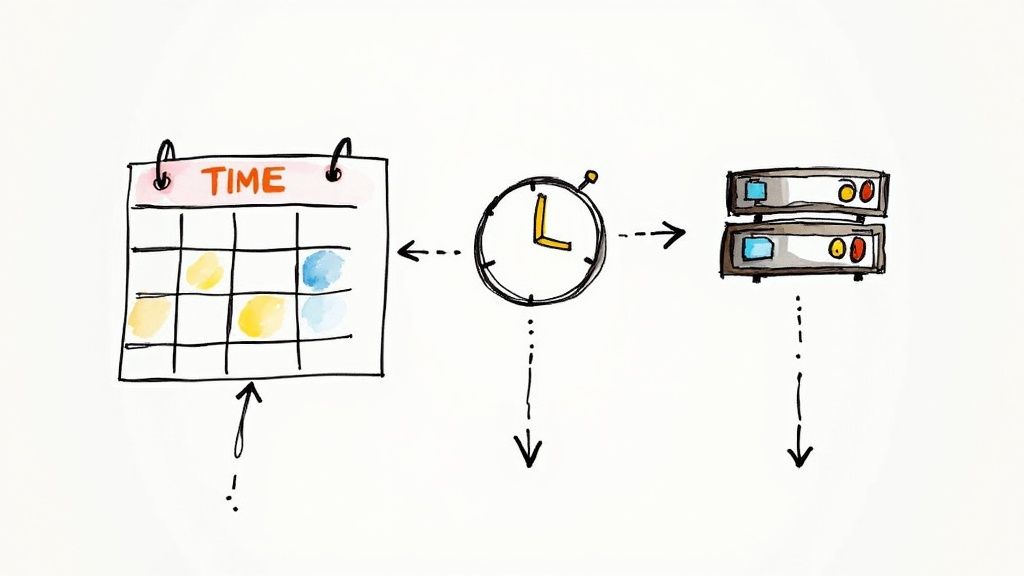

3. Environment Scheduling and Resource Optimization

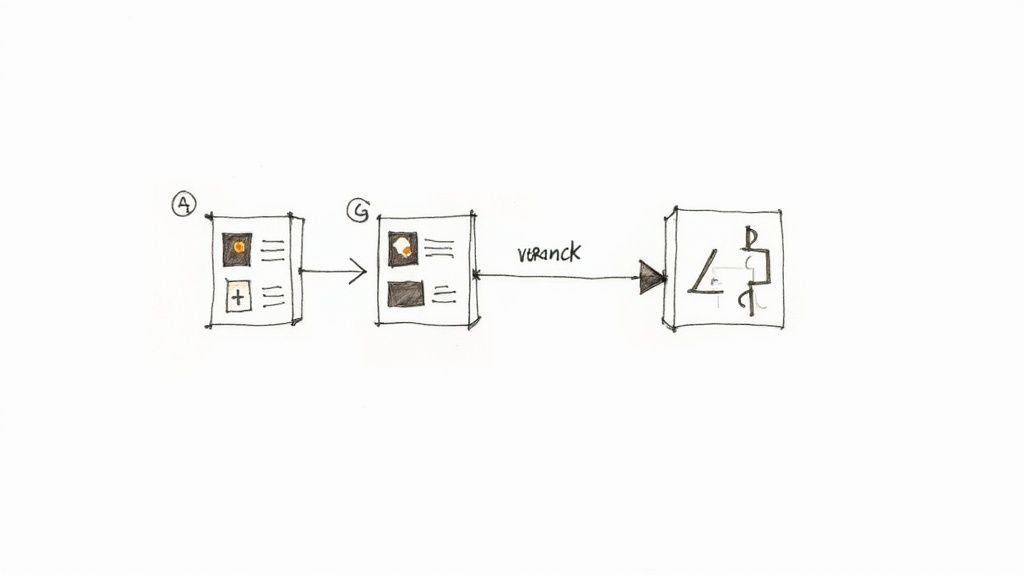

Environment scheduling and resource optimization involve implementing intelligent systems to allocate and manage test environments effectively. This practice moves away from static, always-on environments to a dynamic model where resources are booked, used, and released based on team needs. By applying time-based scheduling, resource pooling, and dynamic allocation, organizations can maximize the utilization of expensive infrastructure, prevent booking conflicts, and significantly reduce operational costs, making it a crucial component of modern test environment management best practices.

This approach ensures that critical and often limited resources, like performance testing rigs or fully integrated staging environments, are used to their full potential. For example, Microsoft employs sophisticated environment scheduling within Azure DevOps, allowing thousands of engineers to reserve specific testing configurations without conflict. Similarly, Airbnb uses dynamic resource allocation to spin up and schedule isolated environments for its microservices, ensuring that testing can proceed in parallel without teams waiting for shared resources. These strategies prevent bottlenecks and idle infrastructure.

Key Benefits and Implementation

Adopting environment scheduling yields substantial benefits. It provides clear visibility into environment availability and usage, which helps in planning and forecasting. This practice dramatically improves resource utilization, cutting down on cloud hosting bills and hardware expenses by ensuring environments are not sitting idle. Most importantly, it reduces contention among teams, eliminating the "who is using this environment?" problem and streamlining the entire testing workflow.

For effective implementation, consider these actionable steps:

- Implement Self-Service Booking Interfaces: Provide teams with a user-friendly portal or calendar-based system to view availability and reserve environments. This empowers developers and QA engineers while centralizing management.

- Use Historical Data for Prediction: Analyze past usage patterns to predict peak demand periods. Use this data to proactively scale resources or adjust scheduling policies to meet anticipated needs.

- Set Up Automated Notifications: Configure automated alerts to notify teams when their reserved environment is ready, when their booking is about to expire, and when a previously booked environment becomes free.

- Establish Clear Governance Policies: Define rules for booking duration, extensions, and emergency access. This ensures fairness and prevents a single team from monopolizing critical resources for extended periods.
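The booking and governance rules above can be sketched as a small conflict check. This is a minimal illustration, not a scheduling product: the `Booking` type, the `try_book` helper, and the eight-hour limit are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

MAX_BOOKING = timedelta(hours=8)  # hypothetical governance limit


@dataclass(frozen=True)
class Booking:
    environment: str
    team: str
    start: datetime
    end: datetime


def overlaps(a, b):
    """Two bookings conflict if they share an environment and their time ranges intersect."""
    return a.environment == b.environment and a.start < b.end and b.start < a.end


def try_book(existing, request, max_duration=MAX_BOOKING):
    """Return (accepted, reason) for a booking request."""
    if request.end <= request.start:
        return False, "end must be after start"
    if request.end - request.start > max_duration:
        return False, "exceeds maximum booking duration"
    for booking in existing:
        if overlaps(booking, request):
            return False, f"conflicts with booking held by {booking.team}"
    return True, "ok"
```

Treating the end time as exclusive lets one team's slot begin exactly when another's finishes, which keeps shared environments busy without double-booking.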

4. Configuration Management and Version Control

Configuration management is the discipline of systematically handling changes to a system’s configuration so that it maintains its integrity over time. In the context of test environment management best practices, it involves codifying, versioning, and consistently applying all environment settings, dependencies, and application parameters. By treating configuration as code, teams can prevent environment drift, ensure reproducibility, and trace every change back to its source, which is critical for stable and reliable testing.

This approach allows the configuration of a production environment to be closely replicated in staging, QA, or development environments. For example, Google uses its internal configuration management tools alongside Git to manage settings across its vast infrastructure, ensuring consistency at scale. Similarly, many companies rely on tools like Chef to define system configurations in "cookbooks" that can be applied automatically, so that every server is configured from the same definition and manual errors are avoided.

Key Benefits and Implementation

Adopting a robust configuration management strategy provides several key advantages. It increases reliability by ensuring environments are built to exact specifications every time, eliminating configuration-related bugs. It also enhances security and compliance by allowing teams to enforce policies, track changes for audits, and quickly remediate vulnerabilities across all environments. Finally, it improves efficiency by automating tedious setup tasks and enabling developers to self-serve compliant environments.

For effective implementation, consider these actionable steps:

- Utilize Configuration Management Tools: Adopt industry-standard tools like Ansible, Puppet, or Chef. These platforms let you describe the desired state of your systems in code, and they handle the logic of applying and maintaining that state.

- Version Control All Configurations: Store all configuration files, scripts, and templates in a version control system like Git. This creates an auditable history of all changes and is a foundational concept in modern code management.

- Implement a Peer Review Process: Treat configuration changes like code changes. Require pull requests and peer reviews before merging any modifications to ensure they are vetted for accuracy, security, and potential impact.

- Automate Validation and Compliance: Integrate automated checks into your CI/CD pipeline to validate that deployed environments conform to their defined configurations. Tools like AWS Config can continuously monitor and report on configuration compliance.
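A validation step of this kind can be as simple as comparing the versioned, desired configuration with what is actually deployed. The sketch below is a minimal drift check over nested dictionaries. The `find_drift` helper and the idea that both sides are available as plain dictionaries with string keys are assumptions; a real pipeline would load them from the repository and from the running environment.

```python
def find_drift(desired, actual, path=""):
    """Return human-readable differences between desired and actual config."""
    drift = []
    for key in sorted(set(desired) | set(actual)):
        location = f"{path}.{key}" if path else str(key)
        if key not in actual:
            drift.append(f"missing: {location}")
        elif key not in desired:
            drift.append(f"unexpected: {location}")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            # Recurse into nested sections so the report names the exact setting.
            drift.extend(find_drift(desired[key], actual[key], location))
        elif desired[key] != actual[key]:
            drift.append(
                f"changed: {location} "
                f"(expected {desired[key]!r}, found {actual[key]!r})"
            )
    return drift
```

A CI job could fail the build whenever the returned list is non-empty, turning silent drift into a visible, reviewable event.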

5. Environment Monitoring and Health Checks

Comprehensive environment monitoring and health checking is the practice of implementing automated systems to continuously track the performance, availability, and overall health of test environments. This proactive approach ensures that environments are stable and functioning as expected, preventing test failures caused by underlying infrastructure issues rather than application code defects. By collecting and analyzing metrics in real-time, teams can detect anomalies, diagnose problems, and resolve issues before they impact development and testing workflows, making it a critical component of modern test environment management best practices.

This strategy moves teams from a reactive to a proactive stance on environment stability. For instance, Uber leverages a powerful combination of Prometheus for data collection and Grafana for visualization to monitor its vast testing infrastructure, enabling engineers to quickly pinpoint performance bottlenecks. Similarly, PayPal utilizes APM solutions like New Relic to create custom dashboards that provide deep visibility into service health, ensuring their complex financial transaction environments are always ready for rigorous testing. This level of oversight guarantees that test results are a true reflection of code quality, not a symptom of a faulty environment.

Key Benefits and Implementation

Adopting robust monitoring and health checks delivers immediate and significant value. It increases test reliability by ensuring the underlying environment is stable, leading to fewer spurious test failures that have nothing to do with the code under test. It also reduces downtime by enabling proactive issue detection and automated remediation, keeping development velocity high. Furthermore, it provides valuable performance insights that can be used to optimize both the application and the environment configuration for better efficiency and resource utilization.

For effective implementation, consider these actionable steps:

- Define Clear KPIs: Establish key performance indicators (KPIs) and service-level agreements (SLAs) for environment uptime and performance. Metrics like CPU/memory utilization, network latency, and application error rates should be baseline requirements.

- Implement Layered Monitoring: Combine infrastructure-level monitoring (e.g., server health, disk space) with application-level monitoring (APM) to get a complete picture. Include network monitoring as well, so that latency and connectivity problems are detected before they show up as test failures.

- Automate Remediation: Configure your monitoring system to trigger automated actions for common, predictable issues. For example, automatically restart a hung service or scale resources in response to a CPU spike to maintain environment stability without manual intervention.

- Create Role-Specific Dashboards: Build customized dashboards in tools like Grafana or Datadog that provide relevant views for different stakeholders, such as developers, QA engineers, and DevOps teams. This ensures everyone has quick access to the information they need.
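To make the KPI idea concrete, the sketch below classifies a snapshot of environment metrics against warning and critical thresholds. The metric names and threshold values are hypothetical placeholders, and in practice the numbers would come from a monitoring system such as Prometheus rather than a hand-built dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warn: float
    critical: float


# Hypothetical baseline thresholds; tune these per environment.
THRESHOLDS = {
    "cpu_percent": Threshold(warn=75, critical=90),
    "memory_percent": Threshold(warn=80, critical=95),
    "p95_latency_ms": Threshold(warn=300, critical=1000),
    "error_rate_percent": Threshold(warn=1, critical=5),
}

SEVERITY = {"ok": 0, "warn": 1, "critical": 2}


def check_metric(name, value, thresholds=THRESHOLDS):
    """Classify one metric value as ok, warn, or critical."""
    limit = thresholds[name]
    if value >= limit.critical:
        return "critical"
    if value >= limit.warn:
        return "warn"
    return "ok"


def environment_health(metrics, thresholds=THRESHOLDS):
    """Return (overall_status, per_metric_status) for a metrics snapshot."""
    results = {
        name: check_metric(name, value, thresholds)
        for name, value in metrics.items()
        if name in thresholds  # ignore metrics without a defined threshold
    }
    overall = max(results.values(), key=SEVERITY.get, default="ok")
    return overall, results
```

A pipeline could call `environment_health` before a test run and skip or retry the run when the overall status is critical, so environment faults are not misread as code defects.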

6. Environment Lifecycle Management

Environment lifecycle management is the practice of governing the entire lifespan of a test environment, from its initial request and creation to its eventual decommissioning. This comprehensive approach involves defining clear policies, implementing automated cleanup processes, and tracking resource usage to prevent environment sprawl and control costs. By treating environments as assets with a defined lifecycle, organizations can ensure efficient resource utilization, maintain a clean inventory, and improve overall operational governance.

This discipline moves teams away from the "create and forget" mentality that leads to bloated, underutilized infrastructure. For instance, Deutsche Bank employs strict governance and automated lifecycle policies for its financial services testing environments to meet stringent compliance and security requirements. Similarly, General Electric automates the lifecycle of its industrial IoT test environments, spinning them up for specific simulation runs and tearing them down afterward to manage the massive scale of their testing operations. This structured approach is a core component of effective test environment management best practices.

Key Benefits and Implementation

Adopting a formal lifecycle management strategy delivers crucial operational and financial benefits. It significantly reduces cloud waste by automatically decommissioning idle or abandoned environments, leading to direct cost savings. It also improves security posture by minimizing the number of active, unmonitored environments that could become attack vectors. Furthermore, it enhances resource availability by freeing up capacity that would otherwise be locked in zombie environments, ensuring teams have what they need when they need it. To learn more about this, you can read about managing test environments on articles.mergify.com.

For effective implementation, consider these actionable steps:

- Define and Communicate Policies: Establish clear policies for environment ownership, lifespan, and renewal processes. Document these rules in a central wiki and communicate them widely to ensure all teams understand their responsibilities.

- Implement Automated Cleanup and Notifications: Use scripts or platform features to automatically detect and decommission expired environments. Send automated notifications to owners before expiration to give them a chance to extend the environment's life if needed.

- Use Tagging for Cost Allocation: Implement a mandatory tagging strategy for all environments, capturing details like owner, team, project, and creation date. Use these tags to track costs, generate reports, and enforce accountability.

- Establish Approval Workflows: For environments that need to exist longer than the standard policy allows, create a formal approval workflow. This ensures that long-term environments are justified and regularly reviewed.
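The cleanup, notification, and tagging steps above can be sketched as a single planning function. This is an illustration under stated assumptions: the `expires_at` tag, the 24-hour notice window, and the `plan_cleanup` helper are hypothetical, and timestamps are assumed to be ISO 8601 strings with a UTC offset.

```python
from datetime import datetime, timedelta, timezone

# Tags named in the policy above, plus a hypothetical explicit expiry tag.
REQUIRED_TAGS = ("owner", "team", "project", "expires_at")
NOTICE_WINDOW = timedelta(hours=24)  # hypothetical warning period


def plan_cleanup(environments, now=None, notice_window=NOTICE_WINDOW):
    """Sort environments into decommission, notify, and untagged buckets."""
    now = now or datetime.now(timezone.utc)
    plan = {"decommission": [], "notify": [], "untagged": []}
    for env in environments:
        tags = env.get("tags", {})
        if any(tag not in tags for tag in REQUIRED_TAGS):
            # Untagged environments cannot be attributed, so flag them for review.
            plan["untagged"].append(env["name"])
            continue
        expires_at = datetime.fromisoformat(tags["expires_at"])
        if expires_at <= now:
            plan["decommission"].append(env["name"])
        elif expires_at - now <= notice_window:
            plan["notify"].append((env["name"], tags["owner"]))
    return plan
```

Separating the plan from the actions that carry it out makes the policy easy to review and dry-run before anything is actually torn down.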

7. Containerization and Microservices Testing

Containerization is the practice of packaging an application and its dependencies into a standardized unit called a container. This approach, using technologies like Docker and orchestration platforms like Kubernetes, creates lightweight, isolated, and portable environments. For test environment management best practices, containerization is a game-changer, especially for modern microservices architectures where applications consist of many independent, deployable services. It enables the creation of complete, self-contained test environments on demand, ensuring consistency from local development to production.

This method allows teams to spin up an entire application stack, including all its microservices and dependencies, in seconds to minutes rather than hours. Netflix famously leverages Docker and its own container management platform to test its complex microservices architecture, enabling thousands of daily deployments. Similarly, Airbnb uses container-based environments to ensure its service-oriented architecture can be tested reliably and at scale. These examples demonstrate how containerization directly addresses the challenges of testing distributed systems by making environment setup rapid, repeatable, and resource-efficient.

Key Benefits and Implementation

Adopting containerization provides profound advantages for testing complex applications. It increases portability, allowing identical environments to run on a developer’s laptop, a CI/CD server, or a cloud platform. It improves isolation, as each container runs in its own sandboxed environment, preventing conflicts between dependencies and services. Most importantly, it accelerates testing cycles by dramatically reducing the time needed to provision and tear down complex, multi-service environments.

For effective implementation, consider these actionable steps:

- Start with Stateless Applications: Begin your containerization journey with stateless services. These are easier to manage and scale, providing a solid foundation before you tackle more complex stateful services like databases.

- Establish Image Governance: Implement strict container image versioning and use a private registry to store and manage your images. Integrate security scanning tools to check for vulnerabilities in your base images and dependencies.

- Use Service Discovery: In a dynamic microservices environment, services need to find and communicate with each other. Implement a service discovery mechanism (e.g., CoreDNS in Kubernetes) to handle dynamic IP addresses and ports.

- Monitor and Optimize Resources: Use container monitoring tools to track CPU, memory, and network usage. This data helps optimize resource allocation, prevent performance bottlenecks, and control costs in your test environments.
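One small piece of image governance that is easy to automate is rejecting image references that are not pinned to a specific version. The sketch below treats a reference as pinned if it has a digest or a tag other than `latest`. The helper names are hypothetical, and the parsing is deliberately simplified rather than a full implementation of the image reference grammar.

```python
def split_image_ref(ref):
    """Split an image reference into (name, tag, digest)."""
    name, _, digest = ref.partition("@")
    # A colon before the last "/" is a registry port, not a tag separator.
    last_segment = name.rsplit("/", 1)[-1]
    tag = ""
    if ":" in last_segment:
        name, _, tag = name.rpartition(":")
    return name, tag, digest


def is_pinned(ref):
    """True if the reference has a digest or an explicit tag other than 'latest'."""
    _, tag, digest = split_image_ref(ref)
    if digest:
        return True
    return bool(tag) and tag != "latest"


def unpinned_images(refs):
    """Return the references that would float to whatever image is newest."""
    return [ref for ref in refs if not is_pinned(ref)]
```

Running a check like this over the images used by a test environment helps keep two runs of the same pipeline from silently testing different base images.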

8. Cloud-Native Environment Management

Cloud-native environment management leverages cloud services, serverless architectures, and managed platforms to build dynamic, scalable, and cost-effective test environments. Instead of replicating on-premises hardware, this approach embraces the unique capabilities of the cloud, such as elasticity, pay-as-you-go pricing, and a vast ecosystem of managed services. It allows teams to provision complex, production-like environments on-demand, using the cloud provider's infrastructure as the backbone for all testing activities, a core tenet of modern test environment management best practices.

This methodology enables organizations to move beyond static, long-lived test servers to ephemeral infrastructure that perfectly matches the scale and complexity of the application under test. Capital One, for instance, migrated its entire testing infrastructure to Amazon Web Services (AWS), utilizing cloud-native tools to achieve greater agility and security. Similarly, Netflix's groundbreaking microservices architecture is built entirely on AWS, where its testing strategy relies on creating isolated cloud-native environments to validate individual service changes without disrupting the entire system.

Key Benefits and Implementation

Adopting a cloud-native approach offers transformative benefits. It provides unparalleled scalability, allowing teams to spin up massive environments for performance testing and then scale them down to zero to save costs. It also promotes cost efficiency by shifting from a capital expenditure model (buying hardware) to an operational expenditure model (paying only for resources used). Furthermore, it accelerates innovation by giving developers access to cutting-edge managed services like serverless functions, managed databases, and AI/ML APIs for more sophisticated testing scenarios.

For effective implementation, consider these actionable steps:

- Leverage Managed Services: Instead of self-hosting databases, message queues, or caches, use managed cloud services like Amazon RDS, Google Cloud Pub/Sub, or Azure Cosmos DB. This reduces operational overhead and, when production runs on the same services, keeps your test environment close to the production setup.

- Implement Robust Cost Monitoring: Utilize cloud provider tools like AWS Cost Explorer or Azure Cost Management to track spending. Set up budgets, alerts, and automated shutdown policies for idle environments to prevent unexpected bills.

- Codify Cloud Resources: Use Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation to define and manage all cloud resources. This ensures your cloud-native environments are reproducible, version-controlled, and easy to tear down.

- Design for Elasticity: Build your test environments to take advantage of cloud elasticity. Use auto-scaling groups for virtual machines or leverage serverless platforms like AWS Lambda or Azure Functions that automatically scale with demand, ensuring you only pay for compute time you actually use.
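The cost argument for on-demand environments is simple arithmetic, sketched below. The hourly rate, usage hours, and day counts are hypothetical inputs chosen for illustration and are not real cloud prices.

```python
def monthly_cost(hourly_rate, hours_per_day, days_per_month):
    """Cost of running an environment for the given hours and days."""
    return round(hourly_rate * hours_per_day * days_per_month, 2)


def on_demand_savings(hourly_rate, active_hours_per_day,
                      working_days=22, days_in_month=30):
    """Compare an always-on environment with one that runs only while in use."""
    always_on = monthly_cost(hourly_rate, 24, days_in_month)
    on_demand = monthly_cost(hourly_rate, active_hours_per_day, working_days)
    saved = round(always_on - on_demand, 2)
    saved_percent = round(100 * saved / always_on, 1) if always_on else 0.0
    return {
        "always_on": always_on,
        "on_demand": on_demand,
        "saved": saved,
        "saved_percent": saved_percent,
    }
```

With a hypothetical rate of 2.50 per hour and six active hours on each of 22 working days, the always-on environment costs 1800.00 per month against 330.00 on demand, a reduction of roughly 82 percent.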

Best Practices Comparison Matrix for Test Environment Management

| Aspect | Environment Provisioning Automation | Test Data Management and Masking | Environment Scheduling and Resource Optimization | Configuration Management and Version Control | Environment Monitoring and Health Checks | Environment Lifecycle Management | Containerization and Microservices Testing | Cloud-Native Environment Management |
|---|---|---|---|---|---|---|---|---|
| Implementation Complexity 🔄 | High - Requires skilled DevOps and tooling investment | High - Complex data relationships and masking rules | Medium - Scheduling algorithms and team discipline | Medium - Needs discipline and thorough config analysis | Medium - Monitoring tools setup and tuning required | Medium - Governance and policy enforcement needed | High - Container expertise and networking challenges | High - Cloud architecture knowledge and vendor management |
| Resource Requirements ⚡ | Moderate - Automation tools and licensing costs | Moderate - Data storage and transformation resources | Low - Scheduling systems and monitoring | Low - Configuration tools and version control | Moderate - Monitoring infrastructure and alerting systems | Low to Moderate - Policy automation tools and auditing | Moderate - Container platforms and orchestration tools | Moderate - Cloud services, serverless and multi-cloud usage |
| Expected Outcomes 📊 | ⭐⭐⭐⭐⭐ Rapid, consistent environment setup; reduces errors | ⭐⭐⭐⭐ Ensures compliance & realistic datasets; privacy protection | ⭐⭐⭐⭐ Optimized resource use; fewer conflicts | ⭐⭐⭐⭐ Consistent configs; quick rollback; audit trails | ⭐⭐⭐⭐ Proactive issue detection; improved uptime | ⭐⭐⭐⭐ Controls sprawl; cost visibility and security | ⭐⭐⭐⭐ Fast provisioning; supports microservices; consistent env | ⭐⭐⭐⭐⭐ Scalable, cost-effective, globally distributed environments |
| Ideal Use Cases 💡 | Large teams needing repeatable, scalable environment setups | Industries handling sensitive data needing compliance | Organizations with shared resources and multiple test teams | Projects with complex configs requiring strict control | Environments needing high reliability and continuous monitoring | Organizations managing many environments with cost control | Microservices architectures; rapid CI/CD testing | Cloud-first organizations requiring elastic and managed testing |
| Key Advantages ⭐ | Consistency, speed, reduced manual errors | Data privacy, realistic testing, compliance | Infrastructure ROI, reduced wait times | Configuration consistency, rollback ability | Proactive maintenance, capacity planning | Environment governance, cost & risk management | Lightweight, portable, scalable environments | Elastic scalability, pay-per-use, managed cloud services |

Elevating Your Development Workflow with Intelligent Automation

Navigating the complexities of modern software development requires more than just skilled engineers; it demands a robust, reliable, and efficient ecosystem supporting the entire delivery pipeline. Throughout this guide, we've explored the foundational pillars of superior test environment management, moving from the reactive and chaotic to the proactive and predictable. The journey from manual setups to automated, observable systems is not merely an operational tweak but a fundamental strategic shift.

By embracing the test environment management best practices detailed in this article, you are directly investing in your team's velocity, your product's quality, and your organization's competitive edge. Each practice, from automated provisioning and sophisticated test data management to containerization and cloud-native strategies, works in concert to eliminate friction, reduce waste, and amplify developer productivity. You are building a system where clean, consistent, and production-like environments are the default, not the exception.

From Theory to Tangible Impact

The true value of mastering these principles lies in transforming your software development lifecycle (SDLC) into a well-oiled machine. Consider the cumulative effect:

- Accelerated Feedback Loops: Developers no longer wait hours or days for a usable environment. With automation and self-service provisioning, they receive feedback on their code in minutes, enabling rapid iteration and innovation.

- Drastically Reduced "It Works on My Machine" Issues: By enforcing configuration as code and versioning every aspect of the environment, you ensure consistency across all stages, from local development to production. This eradicates a notorious source of bugs and deployment failures.

- Enhanced Security Posture: Integrating practices like data masking and role-based access control directly into your environment management strategy hardens your pre-production stages, preventing sensitive data leaks and ensuring compliance.

- Significant Cost Optimization: Intelligent scheduling, resource monitoring, and automated teardown of ephemeral environments prevent resource sprawl and ensure you only pay for the infrastructure you actively use, a critical advantage in cloud-based development.

Your Actionable Path Forward

Adopting these test environment management best practices is a progressive journey, not an overnight overhaul. The key is to start small, demonstrate value, and build momentum. Begin by identifying the most significant bottleneck in your current process. Is it the time it takes to provision a new environment? Or perhaps the constant stream of bugs caused by configuration drift?

Tackle that one problem first. Implement a basic automation script for provisioning, or place your environment configurations under version control using a tool like Ansible or Terraform. As your team experiences the benefits firsthand, you will build the cultural and technical foundation needed to tackle more advanced practices like containerization with Docker and Kubernetes or implementing comprehensive monitoring with tools like Prometheus and Grafana.

Ultimately, the goal is to create a seamless, self-sustaining system where high-quality test environments are an on-demand, reliable utility. This empowers your teams to focus their creative energy on building exceptional software, confident that the underlying infrastructure is stable, secure, and efficient. By methodically implementing these strategies, you are not just managing environments; you are engineering a superior development experience that fosters innovation and accelerates delivery.

Ready to eliminate CI bottlenecks and automate your merge process? Mergify integrates seamlessly with your version control system to create an intelligent merge queue, ensuring your test environments are always validating a stable, up-to-date codebase. Start optimizing your development workflow today by exploring Mergify and see how automated pull request management can supercharge your team's productivity.